
6

Security & Monitoring

In a traditional wired network, access control is very straightforward: if a person has physical access to a computer or network hub, they can use (or abuse) the network resources. While software mechanisms are an important component of network security, limiting physical access to the network devices is the ultimate access control mechanism. Simply put, if all terminals and network components are only accessible to trusted individuals, the network can likely be trusted.

The rules change significantly with wireless networks. While the apparent range of your access point may seem to be just a few hundred meters, a user with a high-gain antenna may be able to make use of the network from several blocks away. Should an unauthorized user be detected, it is impossible to simply "trace the cable" back to the user's location. Without transmitting a single packet, a nefarious user can even log all network data to disk. This data can later be used to launch a more sophisticated attack against the network. Never assume that radio waves simply "stop" at the edge of your property line.

It is usually unreasonable to completely trust all users of the network, even on wired networks. Disgruntled employees, uneducated network users, and simple mistakes on the part of honest users can cause significant harm to network operations. As the network architect, your goal is to facilitate private communication between legitimate users of the network. While a certain amount of access control and authentication is necessary in any network, you have failed in your job if legitimate users find it difficult to use the network to communicate.

There's an old saying that the only way to completely secure a computer is to unplug it, lock it in a safe, destroy the key, and bury the whole thing in concrete. While such a system might be completely "secure", it is useless for communication. When you make security decisions for your network, remember that above all else, the network exists so that its users can communicate with each other. Security considerations are important, but should not get in the way of the network's users.

Physical security

When installing a network, you are building an infrastructure that people depend on. Security measures exist to ensure that the network is reliable. For many installations, outages often occur due to human tampering, whether accidental or not. Networks have physical components, such as wires and boxes, which are easily disturbed. In many installations, people will not understand the purpose of the installed equipment, or curiosity may lead them to experiment. They may not realize the importance of a cable connected to a port. Someone may unplug an Ethernet cable so that they can connect their laptop for 5 minutes, or move a switch because it is in their way. A plug might be removed from a power bar because someone needs that receptacle.

Ensuring the physical security of an installation is paramount. Signs and labels will only be useful to those who can read your language. Putting things out of the way and limiting access is the best means to ensure that accidents and tinkering do not occur.

In less developed economies, proper fasteners, ties, or boxes will not be as easy to find. You should be able to find electrical supplies that will work just as well. Custom enclosures are also easy to manufacture and should be considered essential to any installation. It is often economical to pay a mason to make holes and install conduit. While this would be an expensive option in the developed world, this type of labour-intensive activity can be affordable in Southern countries. PVC can be embedded in cement walls for passing cable from room to room. This avoids the need to smash new holes every time a cable needs to be passed. Plastic bags can be stuffed into the conduit around the cables for insulation.

Small equipment should be mounted on the wall and larger equipment should be put in a closet or in a cabinet.

Switches

Switches, hubs or interior access points can be screwed directly onto a wall with a wall plug. It is best to put this equipment as high as possible to reduce the chance that someone will touch the device or its cables.


Cables

At the very least, cables should be hidden and fastened. It is possible to find plastic cable conduit that can be used in buildings. If you cannot find it, simple cable attachments can be nailed into the wall to secure the cable. This will make sure that the cable doesn't hang where it can be snagged, pinched or cut.

It is preferable to bury cables, rather than to leave them hanging across a yard. Hanging wires might be used for drying clothes, or be snagged by a ladder, etc. To avoid vermin and insects, use plastic electrical conduit. The marginal expense will be well worth the trouble. The conduit should be buried about 30 cm deep, or below the frost level in cold climates. It is worth the extra investment of buying larger conduit than is presently required, so that future cables can be run through the same tubing. Consider labeling buried cable with a "call before you dig" sign to avoid future accidental outages.

Power

It is best to have power bars locked in a cabinet. If that is not possible, mount the power bar under a desk, or on the wall and use duct tape (or gaffer tape, a strong adhesive tape) to secure the plug into the receptacle. On the UPS and power bar, do not leave any empty receptacles. Tape them if necessary. People will tend to use the easiest receptacle, so make these critical ones difficult to use. If you do not, you might find a fan or light plugged into your UPS; though it is nice to have light, it is nicer to keep your server running!

Water

Protect your equipment from water and moisture. In all cases make sure that your equipment, including your UPS, is at least 30 cm from the ground, to avoid damage from flooding. Also try to have a roof over your equipment, so that water and moisture will not fall onto it. In moist climates, it is important that the equipment has proper ventilation to ensure that moisture can be exhausted. Small closets need to have ventilation, or moisture and heat can degrade or destroy your gear.

Masts

Equipment installed on a mast is often safe from thieves. Nevertheless, to deter thieves and to keep your equipment safe from winds, it is good to over-engineer mounts. Painting equipment a dull white or grey color reflects the sun and makes it look plain and uninteresting. Panel antennas are often preferred because they are much more subtle and less interesting than dishes. Any installation on walls should be high enough to require a ladder to reach. Try choosing well-lit but not prominent places to put equipment. Also avoid antennae that resemble television antennae, as those are items that will attract the interest of thieves, whereas a wifi antenna will be useless to the average thief.

Threats to the network

One critical difference between Ethernet and wireless is that wireless networks are built on a shared medium. They more closely resemble the old network hubs than modern switches, in that every computer connected to the network can "see" the traffic of every other user. To monitor all network traffic on an access point, one can simply tune to the channel being used, put the network card into monitor mode, and log every frame. This data might be directly valuable to an eavesdropper (including data such as email, voice data, or online chat logs). It may also provide passwords and other sensitive data, making it possible to compromise the network even further. As we'll see later in this chapter, this problem can be mitigated by the use of encryption.

Another serious problem with wireless networks is that their users are relatively anonymous. While it is true that every wireless device includes a unique MAC address that is supplied by the manufacturer, these addresses can often be changed with software. Even when the MAC address is known, it can be very difficult to judge where a wireless user is physically located. Multipath effects, high-gain antennas, and widely varying radio transmitter characteristics can make it impossible to determine if a malicious wireless user is sitting in the next room or is in an apartment building a mile away.

While unlicensed spectrum provides huge cost savings to the user, it has the unfortunate side effect that denial of service (DoS) attacks are trivially simple. By simply turning on a high-powered access point, cordless phone, video transmitter, or other 2.4 GHz device, a malicious person could cause significant problems on the network. Many network devices are vulnerable to other forms of denial of service attacks as well, such as disassociation flooding and ARP table overflows.

Here are several categories of individuals who may cause problems on a wireless network:

• Unintentional users. As more wireless networks are installed in densely populated areas, it is common for laptop users to accidentally associate to the wrong network. Most wireless clients will simply choose any available wireless network when their preferred network is unavailable. The user may then make use of this network as usual, completely unaware that they may be transmitting sensitive data on someone else's network. Malicious people may even take advantage of this by setting up access points in strategic locations, to try to attract unwitting users and capture their data.

  The first step in avoiding this problem is educating your users, and stressing the importance of connecting only to known and trusted networks. Many wireless clients can be configured to only connect to trusted networks, or to ask permission before joining a new network. As we will see later in this chapter, users can safely connect to open public networks by using strong encryption.

• War drivers. The "war driving" phenomenon draws its name from the popular 1983 hacker film "WarGames". War drivers are interested in finding the physical location of wireless networks. They typically drive around with a laptop, GPS, and omnidirectional antenna, logging the name and location of any networks they find. These logs are then combined with logs from other war drivers, and are turned into graphical maps depicting the wireless "footprint" of a particular city.

  The vast majority of war drivers likely pose no direct threat to networks, but the data they collect might be of interest to a network cracker. For example, it might be obvious that an unprotected access point detected by a war driver is located inside a sensitive building, such as a government or corporate office. A malicious person could use this information to illegally access the network there. Arguably, such an AP should never have been set up in the first place, but war driving makes the problem all the more urgent. As we will see later in this chapter, war drivers who use the popular program NetStumbler can be detected with programs such as Kismet. For more information about war driving, see sites such as http://www.wifimaps.com/, http://www.nodedb.com/, or http://www.netstumbler.com/.

• Rogue access points. There are two general classes of rogue access points: those incorrectly installed by legitimate users, and those installed by malicious people who intend to collect data or do harm to the network. In the simplest case, a legitimate network user may want better wireless coverage in their office, or they might find security restrictions on the corporate wireless network too difficult to comply with. By installing an inexpensive consumer access point without permission, the user opens the entire network up to potential attacks from the inside. While it is possible to scan for unauthorized access points on your wired network, setting a clear policy that prohibits them is very important.

  The second class of rogue access point can be very difficult to deal with. By installing a high-powered AP that uses the same ESSID as an existing network, a malicious person can trick people into using their equipment, and log or even manipulate all data that passes through it. Again, if your users are trained to use strong encryption, this problem is significantly reduced.

• Eavesdroppers. As mentioned earlier, eavesdropping is a very difficult problem to deal with on wireless networks. By using a passive monitoring tool (such as Kismet), an eavesdropper can log all network data from a great distance away, without ever making their presence known. Poorly encrypted data can simply be logged and cracked later, while unencrypted data can be easily read in real time.

  If you have difficulty convincing others of this problem, you might want to demonstrate tools such as Etherpeg (http://www.etherpeg.org/) or Driftnet (http://www.ex-parrot.com/~chris/driftnet/). These tools watch a wireless network for graphical data, such as GIF and JPEG files. While other users are browsing the Internet, these tools simply display all graphics found in a graphical collage. I often use tools such as this as a demonstration when lecturing on wireless security. While you can tell a user that their email is vulnerable without encryption, nothing drives the message home like showing them the pictures they are looking at in their web browser. Again, while it cannot be completely prevented, proper application of strong encryption will discourage eavesdropping.
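The second class of rogue access point lends itself to a simple check during a site survey. Any BSSID that advertises one of your ESSIDs but is not in your own inventory of access points deserves a closer look. The sketch below assumes you already have a list of (ESSID, BSSID) pairs from a survey tool such as Kismet or NetStumbler. The data format, function names, and example addresses are hypothetical.

```python
def normalize_mac(mac: str) -> str:
    """Return a 48-bit MAC address in lowercase, colon-separated form."""
    hex_digits = "".join(c for c in mac.lower() if c in "0123456789abcdef")
    if len(hex_digits) != 12:
        raise ValueError(f"not a 48-bit MAC address: {mac!r}")
    return ":".join(hex_digits[i:i + 2] for i in range(0, 12, 2))


def find_suspect_aps(observed: list[tuple[str, str]],
                     our_essids: set[str],
                     our_bssids: set[str]) -> list[tuple[str, str]]:
    """Return (ESSID, BSSID) pairs that advertise one of our network names
    from a radio that is not in our inventory of access points."""
    known = {normalize_mac(b) for b in our_bssids}
    suspects = []
    for essid, bssid in observed:
        bssid = normalize_mac(bssid)
        if essid in our_essids and bssid not in known:
            suspects.append((essid, bssid))
    return suspects


# Hypothetical survey results: (ESSID, BSSID) pairs seen on the air.
survey = [
    ("campus-net", "00:11:22:33:44:55"),  # one of our access points
    ("campus-net", "66-77-88-99-AA-BB"),  # our name, unknown hardware
    ("cafe-wifi", "de:ad:be:ef:00:01"),   # a neighbor's network; ignored
]
print(find_suspect_aps(survey, {"campus-net"}, {"00:11:22:33:44:55"}))
# [('campus-net', '66:77:88:99:aa:bb')]
```

A flagged entry is not proof of an attack; it may just be a colleague's unauthorized AP (the first class above). An attacker who also clones one of your BSSIDs will not be flagged at all, so treat this as a first filter and not a guarantee.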

This introduction is intended to give you an idea of the problems you are up against when designing a wireless network. Later in this chapter, we will look at tools and techniques that will help you to mitigate these problems.

Authentication

Before being granted access to network resources, users should first be authenticated. In an ideal world, every wireless user would have an identifier that is unique, unchangeable, and cannot be impersonated by other users. This turns out to be a very difficult problem to solve in the real world.

The closest feature we have to a unique identifier is the MAC address. This is the 48-bit number assigned by the manufacturer to every wireless and Ethernet device. By employing MAC filtering on our access points, we can authenticate users based on their MAC address. With this feature, the access point keeps an internal table of approved MAC addresses. When a wireless user tries to associate to the access point, the MAC address of the client must be on the approved list, or the association will be denied. Alternately, the AP may keep a table of known "bad" MAC addresses, and permit all devices that are not on the list.
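The two filtering modes just described amount to a simple lookup at association time. The sketch below models them in Python. The `MacFilter` class and its "allow"/"deny" mode names are hypothetical and are not taken from any particular access point's firmware. Note that it trusts whatever source address the client presents, which is exactly the weakness discussed next.

```python
from dataclasses import dataclass, field


def normalize_mac(mac: str) -> str:
    """Return a 48-bit MAC address in lowercase, colon-separated form."""
    hex_digits = "".join(c for c in mac.lower() if c in "0123456789abcdef")
    if len(hex_digits) != 12:
        raise ValueError(f"not a 48-bit MAC address: {mac!r}")
    return ":".join(hex_digits[i:i + 2] for i in range(0, 12, 2))


@dataclass
class MacFilter:
    """Hypothetical model of an access point's MAC filter table.

    mode "allow": only addresses in the table may associate.
    mode "deny":  addresses in the table are refused; all others may associate.
    """
    mode: str = "allow"
    table: set[str] = field(default_factory=set)

    def __post_init__(self) -> None:
        if self.mode not in ("allow", "deny"):
            raise ValueError(f"unknown filter mode: {self.mode!r}")

    def add(self, mac: str) -> None:
        self.table.add(normalize_mac(mac))

    def permits(self, mac: str) -> bool:
        """Decide whether a client presenting this source MAC may associate."""
        listed = normalize_mac(mac) in self.table
        return listed if self.mode == "allow" else not listed


approved = MacFilter(mode="allow")
approved.add("00:0F:B5:12:34:56")
print(approved.permits("00-0f-b5-12-34-56"))  # True: on the approved list
print(approved.permits("00:0f:b5:ff:ff:ff"))  # False: unknown device

blocked = MacFilter(mode="deny")
blocked.add("00:0f:b5:ff:ff:ff")
print(blocked.permits("00:0f:b5:ff:ff:ff"))   # False: known "bad" address
print(blocked.permits("00:0f:b5:12:34:56"))   # True: not on the list
```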

Unfortunately, this is not an ideal security mechanism. Maintaining MAC tables on every device can be cumbersome, requiring all client devices to have their MAC addresses recorded and uploaded to the APs. Even worse, MAC addresses can often be changed in software. By observing MAC addresses in use on a wireless network, a determined attacker can spoof (impersonate) an approved MAC address and successfully associate to the AP. While MAC filtering will prevent unintentional users and even most curious individuals from accessing the network, MAC filtering alone cannot prevent attacks from determined attackers.


MAC filters are useful for temporarily limiting access from misbehaving clients. For example, if a laptop has a virus that sends large amounts of spam or other traffic, its MAC address can be added to the filter table to stop the traffic immediately. This will buy you time to track down the user and fix the problem.
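If you script this kind of emergency block, you can give each entry an expiry time. Stopgap entries then lapse on their own instead of piling up in the filter table after the user has been found and the problem fixed. The sketch below is hypothetical: the `TemporaryBlocklist` class and the one-hour default are illustrative assumptions. A real access point exposes its filter table through its own management interface, and this code does not talk to one.

```python
import time
from typing import Callable, Dict, Optional


class TemporaryBlocklist:
    """Hypothetical deny list whose entries lapse after a set period."""

    def __init__(self, default_seconds: float = 3600.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.default_seconds = default_seconds
        self.clock = clock  # injectable so the expiry logic can be tested
        self._expires: Dict[str, float] = {}  # MAC -> time the block lapses

    def block(self, mac: str, seconds: Optional[float] = None) -> None:
        """Block a MAC address (assumed colon-separated) for a limited time."""
        duration = self.default_seconds if seconds is None else seconds
        self._expires[mac.lower()] = self.clock() + duration

    def unblock(self, mac: str) -> None:
        self._expires.pop(mac.lower(), None)

    def is_blocked(self, mac: str) -> bool:
        mac = mac.lower()
        expiry = self._expires.get(mac)
        if expiry is None:
            return False
        if self.clock() >= expiry:
            del self._expires[mac]  # the block has lapsed; forget the entry
            return False
        return True


# Simulated clock so the example does not have to wait.
now = [0.0]
blocklist = TemporaryBlocklist(default_seconds=600, clock=lambda: now[0])
blocklist.block("00:0F:B5:AA:BB:CC")               # the infected laptop
print(blocklist.is_blocked("00:0f:b5:aa:bb:cc"))  # True
now[0] = 601.0                                     # just over ten minutes later
print(blocklist.is_blocked("00:0f:b5:aa:bb:cc"))  # False
```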

Another popular authentication feature of wireless networks is the so-called closed network. In a typical network, APs will broadcast their ESSID many times per second, allowing wireless clients (as well as tools such as NetStumbler) to find the network and display its presence to the user. In a closed network, the AP does not beacon the ESSID, and users must know the full name of the network before the AP will allow association. This prevents casual users from discovering the network and selecting it in their wireless client.

There are a number of drawbacks to this feature. Forcing users to type in the full ESSID before connecting to the network is error prone and often leads to support calls and complaints. Since the network isn't obviously present in site survey tools like NetStumbler, this can prevent your networks from showing up on war driving maps. But it also means that other network builders cannot easily find your network either, and specifically won't know that you are already using a given channel. A conscientious neighbor may perform a site survey, see no nearby networks, and install their own network on the same channel you are using. This will cause interference problems for both you and your neighbor.

Finally, using closed networks ultimately adds little to your network's overall security. By using passive monitoring tools (such as Kismet), a skilled user can detect frames sent from your legitimate clients to the AP. These frames necessarily contain the network name. A malicious user can then use this name to associate to the access point, just like a normal user would.
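The network name leaks because of the way it is carried. In 802.11 management frames the body contains tagged information elements. Each element is a one-byte element ID, a one-byte length, and that many bytes of data, and element ID 0 holds the SSID. In a probe request the body consists only of such elements, while other management frames (beacons, association requests) put some fixed fields first. The sketch below pulls the SSID out of a probe-request body. It assumes the 802.11 MAC header has already been stripped, as a capture library would do, and the function names and sample bytes are illustrative.

```python
from typing import Iterator, Optional, Tuple

SSID_ELEMENT_ID = 0  # element ID 0 carries the SSID in 802.11 management frames


def iter_elements(body: bytes) -> Iterator[Tuple[int, bytes]]:
    """Yield (element_id, data) pairs from a run of tagged information elements."""
    offset = 0
    while offset + 2 <= len(body):
        element_id, length = body[offset], body[offset + 1]
        data = body[offset + 2:offset + 2 + length]
        if len(data) < length:
            break  # truncated capture: stop rather than guess
        yield element_id, data
        offset += 2 + length


def ssid_from_probe_body(body: bytes) -> Optional[str]:
    """Return the SSID named in a probe-request body, or None if there is none."""
    for element_id, data in iter_elements(body):
        if element_id == SSID_ELEMENT_ID:
            # A zero-length SSID is a wildcard probe, which names no network.
            return data.decode("utf-8", errors="replace") or None
    return None


# Sample body: an SSID element "campus-net" followed by a Supported Rates element.
sample = bytes([0, 10]) + b"campus-net" + bytes([1, 4, 0x82, 0x84, 0x8B, 0x96])
print(ssid_from_probe_body(sample))  # campus-net
```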

Encryption is probably the best tool we have for authenticating wireless users. Through strong encryption, we can uniquely identify a user in a manner that is very difficult to spoof, and use that identity to determine further network access. Encryption also has the benefit of adding a layer of privacy by preventing eavesdroppers from easily watching network traffic.

The most widely employed encryption method on wireless networks is WEP encryption. WEP stands for Wired Equivalent Privacy, and is supported by virtually all 802.11a/b/g equipment. WEP uses a shared 40-bit key to encrypt data between the access point and client. The key must be entered on the APs as well as on each of the clients. With WEP enabled, wireless clients cannot associate with the AP until they use the correct key. An eavesdropper listening to a WEP-enabled network will still see traffic and MAC addresses, but the data payload of each packet is encrypted. This provides a fairly good authentication mechanism while also adding a bit of privacy to the network.


WEP is definitely not the strongest encryption solution available. For one thing, the WEP key is shared between all users. If the key is compromised (say, if one user tells a friend what the password is, or if an employee is let go) then changing the password can be prohibitively difficult, since all APs and client devices need to be changed. This also means that legitimate users of the network can still eavesdrop on each other's traffic, since they all know the shared key. The key itself is often poorly chosen, making offline cracking attempts feasible. Even worse, the implementation of WEP itself is broken in many access points, making it even easier to crack some networks. While manufacturers have implemented a number of extensions to WEP (such as longer keys and fast rotation schemes), these extensions are not part of the standard, and generally will not interoperate between equipment from different manufacturers. By upgrading to the most recent firmware for all of your wireless devices, you can prevent some of the early attacks found in WEP.
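Some quick arithmetic shows why a poorly chosen key matters. A 40-bit WEP key has 2^40 possible values. Many devices, however, accept the key as five ASCII characters, which tempts people to use a short word. The sketch below compares a brute-force search of the full key space with a search limited to five lowercase letters. The rate of one million guesses per second is an arbitrary assumption for illustration, not a measured figure for any tool. Practical attacks on WEP mostly exploit the way it uses RC4 (see the papers listed below) rather than brute force, so these numbers understate the problem.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def search_time_days(keyspace: int, guesses_per_second: float) -> float:
    """Worst-case days needed to try every key at the given rate."""
    return keyspace / guesses_per_second / SECONDS_PER_DAY


RATE = 1_000_000  # assumed guesses per second; illustrative only

full_keyspace = 2 ** 40   # every possible 40-bit key
five_lowercase = 26 ** 5  # five ASCII letters a-z, one byte each

print(f"full 40-bit key space: {full_keyspace:,} keys, "
      f"{search_time_days(full_keyspace, RATE):.1f} days")
print(f"five lowercase letters: {five_lowercase:,} keys, "
      f"{five_lowercase / RATE:.1f} seconds")
# full 40-bit key space: 1,099,511,627,776 keys, 12.7 days
# five lowercase letters: 11,881,376 keys, 11.9 seconds
```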

WEP can still be a useful authentication tool. Assuming your users can be trusted not to give away the password, you can be fairly sure that your wireless clients are legitimate. While WEP cracking is possible, it is beyond the skill of most users. WEP is quite useful for securing long distance point-to-point links, even on generally open networks. By using WEP on such a link, you will discourage others from associating to the link, and they will likely use other available APs instead. Think of WEP as a handy "keep out" sign for your network. Anyone who detects the network will see that a key is required, making it clear that they are not welcome to use it.

WEP's greatest strength is its interoperability. In order to comply with the 802.11 standards, all wireless devices support basic WEP. While it isn't the strongest method available, it is certainly the most commonly implemented encryption feature. We will look at other more advanced encryption techniques later in this chapter.

For more details about the state of WEP encryption, see these papers:

• http://www.isaac.cs.berkeley.edu/isaac/wep-faq.html
• http://www.cs.umd.edu/~waa/wireless.pdf
• http://www.crypto.com/papers/others/rc4_ksaproc.ps

Another data-link layer authentication protocol is Wi-Fi Protected Access, or WPA. WPA was created specifically to deal with the known problems with WEP mentioned earlier. It provides a significantly stronger encryption scheme, and can use a shared private key, unique keys assigned to each user, or even SSL certificates to authenticate both the client and the access point. Authentication credentials are checked using the 802.1X protocol, which can consult a third-party database such as RADIUS. Through the use of Temporal Key Integrity Protocol (TKIP), keys can be rotated quickly over time, further reducing the likelihood that a particular session can be cracked. Overall, WPA provides significantly better authentication and privacy than standard WEP.

WPA requires fairly recent access point hardware and up-to-date firmware on all wireless clients, as well as a substantial amount of configuration. If you are installing a network in a setting where you control the entire hardware platform, WPA can be ideal. By authenticating both clients and APs, it solves the rogue access point problem and provides many significant advantages over WEP. But in most network settings where the vintage of hardware is mixed and the knowledge of wireless users is limited, WPA can be a nightmare to install. It is for this reason that most sites continue to use WEP, if encryption is used at all.

Captive portals

One common authentication tool used on wireless networks is the captive portal. A captive portal uses a standard web browser to give a wireless user the opportunity to present login credentials. It can also be used to present information (such as an Acceptable Use Policy) to the user before granting further access. By using a web browser instead of a custom program for authentication, captive portals work with virtually all laptops and operating systems. Captive portals are typically used on open networks with no other authentication methods (such as WEP or MAC filters).

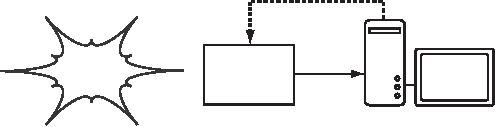

To

begin, a wireless user opens

their laptop and selects

the network. Their

computer

requests a DHCP lease, which

is granted. They then use

their web

browser

to go to any site on the

Internet.

http://google.com/

Captive

Internet

portal

Login:

Figure

6.1: The user requests a

web page and is

redirected.

Instead of receiving the requested page, the user is presented with a login screen. This page can require the user to enter a user name and password, simply click a "login" button, type in numbers from a pre-paid ticket, or enter any other credentials that the network administrators require. The user then enters their credentials, which are checked by the access point or another server on the network. All other network access is blocked until these credentials are verified.

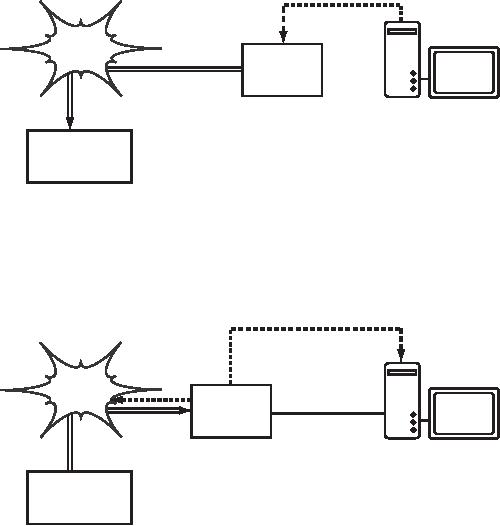

HTTP

request waiting

Internet

Captive

portal

User:

joe

Password:

secret

Authentication

service

Figure

6.2: The user's credentials

are verified

before further network

access is

granted.

The authentication server can be the access point itself, another machine on the local network, or a server anywhere on the Internet. Once authenticated, the user is permitted to access network resources, and is typically redirected to the site they originally requested.

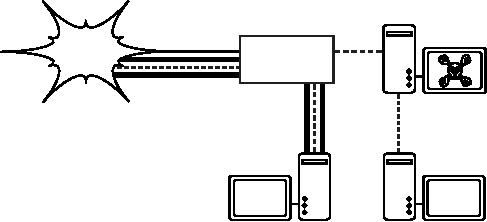

Redirect

to http://google.com/

Internet

Captive

portal

Credentials

http://google.com/

verified.

Authentication

service

Figure

6.3: After authenticating,

the user is permitted to

access the rest

of

the network.

Captive portals provide no encryption for the wireless users, instead relying on the MAC and IP address of the client as a unique identifier. Since this is not necessarily very secure, many implementations will require the user to re-authenticate periodically. This can often be done automatically by minimizing a special pop-up browser window when the user first logs in.
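The flow in Figures 6.1 to 6.3, together with periodic re-authentication, can be modelled as a small amount of state keyed on the client's MAC and IP address. The sketch below is hypothetical. The class, the portal URLs, the in-memory credential check, and the 30-minute session length are all assumptions, standing in for whatever an actual portal and its authentication service provide. Pending redirects of clients who never log in are not cleaned up here.

```python
import time
from typing import Callable, Dict, Optional, Tuple
from urllib.parse import quote

LOGIN_URL = "http://portal.example/login"      # hypothetical portal address
WELCOME_URL = "http://portal.example/welcome"  # used if no page was pending
SESSION_SECONDS = 30 * 60                      # assumed re-authentication interval

Client = Tuple[str, str]  # (MAC address, IP address) identifies a client


class CaptivePortal:
    """Hypothetical captive portal state, keyed on the client's MAC and IP."""

    def __init__(self, check_credentials: Callable[[str, str], bool],
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.check_credentials = check_credentials  # stands in for the auth service
        self.clock = clock
        self.sessions: Dict[Client, float] = {}  # client -> session expiry time
        self.pending: Dict[Client, str] = {}     # client -> URL first requested

    def is_authorized(self, client: Client) -> bool:
        expiry = self.sessions.get(client)
        return expiry is not None and self.clock() < expiry

    def handle_request(self, client: Client, url: str) -> str:
        """Return where the client's web request should actually go."""
        if self.is_authorized(client):
            return url  # authenticated: let the request through
        self.pending[client] = url  # remember the original destination
        return f"{LOGIN_URL}?next={quote(url, safe='')}"

    def login(self, client: Client, user: str, password: str) -> Optional[str]:
        """Verify credentials; on success open a session and return the
        originally requested URL, otherwise return None."""
        if not self.check_credentials(user, password):
            return None
        self.sessions[client] = self.clock() + SESSION_SECONDS
        return self.pending.pop(client, WELCOME_URL)


# Example run with a simulated clock and a one-user credential table.
users = {"joe": "secret"}
now = [0.0]
portal = CaptivePortal(lambda u, p: users.get(u) == p, clock=lambda: now[0])
laptop = ("00:0f:b5:12:34:56", "10.0.0.23")

print(portal.handle_request(laptop, "http://google.com/"))  # the login page
print(portal.login(laptop, "joe", "secret"))                # http://google.com/
print(portal.handle_request(laptop, "http://google.com/"))  # http://google.com/
now[0] += SESSION_SECONDS                                    # session runs out
print(portal.is_authorized(laptop))                          # False
```

Keying sessions on the (MAC, IP) pair keeps the design browser-only and cheap. It is also why the design is weak: someone who copies an authorized pair is indistinguishable from the real user until the session expires.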

Since they do not provide strong encryption, captive portals are not a very good choice for networks that need to be locked down to only allow access from trusted users. They are much more suited to cafes, hotels, and other public access locations where casual network users are expected.

In public or semi-public network settings, encryption techniques such as WEP and WPA are effectively useless. There is simply no way to distribute public or shared keys to members of the general public without compromising the security of those keys. In these settings, a simple application such as a captive portal provides a level of service somewhere between completely open and completely closed.

Popular hotspot projects

• Chillispot (http://www.chillispot.info/). Chillispot is a captive portal designed to authenticate against an existing user credentials database, such as RADIUS. Combined with the application phpMyPrePaid, pre-paid ticket based authentication can be implemented very easily. You can download phpMyPrePaid from http://sourceforge.net/projects/phpmyprepaid/.

• WiFi Dog (http://www.wifidog.org/). WiFi Dog provides a very complete captive portal authentication package in very little space (typically under 30kb). From a user's perspective, it requires no pop-up or JavaScript support, allowing it to work on a wider variety of wireless devices.

• m0n0wall (http://m0n0.ch/wall/). m0n0wall is a complete embedded operating system based on FreeBSD. It includes a captive portal with RADIUS support, as well as a PHP web server.

• NoCatSplash (http://nocat.net/download/NoCatSplash/) provides a customizable splash page to your users, requiring them to click a "login" button before using the network. This is useful for identifying the operators of the network and displaying rules for network access. It provides a very easy solution in situations where you need to provide users of an open network with information and an acceptable use policy.

Privacy

Most users are blissfully unaware that their private email, chat conversations, and even passwords are often sent "in the clear" over dozens of untrusted networks before arriving at their ultimate destination on the Internet. However mistaken they may be, users still typically have some expectation of privacy when using computer networks.

Privacy can be achieved, even on untrusted networks such as public access points and the Internet. The only proven effective method for protecting privacy is the use of strong end-to-end encryption.


Encryption techniques such as WEP and WPA attempt to address the privacy issue at layer two, the data-link layer. This does protect against eavesdroppers listening in on the wireless connection, but this protection ends at the access point. If the wireless client uses insecure protocols (such as POP or simple SMTP for receiving and sending email), then users beyond the AP can still log the session and see the sensitive data. As mentioned earlier, WEP also suffers from the fact that it uses a shared private key. This means that legitimate wireless users can eavesdrop on each other, since they all know the private key.

By using encryption to the remote end of the connection, users can neatly sidestep the entire problem. These techniques work well even on untrusted public networks, where eavesdroppers are listening and possibly even manipulating data coming from the access point.

To ensure data privacy, good end-to-end encryption should provide the following features:

• Verified authentication of the remote end. The user should be able to know without a doubt that the remote end is who it claims to be. Without authentication, a user could give sensitive data to anyone claiming to be the legitimate service.

• Strong encryption methods. The encryption algorithm should stand up to public scrutiny, and not be easily decrypted by a third party. There is no security in obscurity, and strong encryption is even stronger when the algorithm is widely known and subject to peer review. A good algorithm with a suitably large and protected key can provide encryption that is unlikely to be broken by any effort in our lifetimes using current technology.

• Public key cryptography. While not an absolute requirement for end-to-end encryption, the use of public key cryptography instead of a shared key can ensure that an individual's data remains private, even if the key of another user of the service is compromised. It also solves certain problems with distributing keys to users over untrusted networks.

• Data encapsulation. A good end-to-end encryption mechanism protects as much data as possible. This can range from encrypting a single email transaction to encapsulation of all IP traffic, including DNS lookups and other supporting protocols. Some encryption tools simply provide a secure channel that other applications can use. This allows users to run any program they like and still have the protection of strong encryption, even if the programs themselves don't support it.
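The point about distributing keys over untrusted networks can be illustrated with the Diffie-Hellman key exchange, one public key technique for agreeing on a secret. Each side publishes a value derived from a private number, and both arrive at the same shared secret without ever transmitting it. The sketch below uses the tiny textbook parameters p = 23 and g = 5 so the numbers are easy to follow. It is a toy: insecure at this size, and with no authentication of the remote end, so it says nothing about the first requirement above. Real systems use vetted libraries and much larger parameters.

```python
import secrets

# Toy public parameters: a small prime modulus and a generator.
# Real deployments typically use primes of 2048 bits or more, or elliptic curves.
P = 23
G = 5


def make_keypair() -> tuple[int, int]:
    """Return (private, public) where public = G**private mod P."""
    private = secrets.randbelow(P - 2) + 1  # 1 <= private <= P - 2
    return private, pow(G, private, P)


def shared_secret(my_private: int, their_public: int) -> int:
    """Combine my private number with the other side's public value."""
    return pow(their_public, my_private, P)


alice_private, alice_public = make_keypair()
bob_private, bob_public = make_keypair()

# Only alice_public and bob_public cross the network; that is all an eavesdropper sees.
alice_secret = shared_secret(alice_private, bob_public)
bob_secret = shared_secret(bob_private, alice_public)
print(alice_secret == bob_secret)  # True
```

With fixed private numbers a = 4 and b = 3, the public values are 5^4 mod 23 = 4 and 5^3 mod 23 = 10. Both sides then compute the same secret, 10^4 mod 23 = 4^3 mod 23 = 18.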

Be aware that laws regarding the use of encryption vary widely from place to place. Some countries treat encryption as munitions, and may require a permit, escrow of private keys, or even prohibit its use altogether. Before implementing any solution that involves encryption, be sure to verify that use of this technology is permitted in your local area.

In the following sections, we'll take a look at some specific tools that can provide good protection for your users' data.

SSL

The most widely available end-to-end encryption technology is Secure Sockets Layer, known simply as SSL. Built into virtually all web browsers, SSL uses public key cryptography and a trusted public key infrastructure (PKI) to secure data communications on the web. Whenever you visit a web URL that starts with https, you are using SSL.

The SSL implementation built into web browsers includes a collection of certificates from trusted sources, called certificate authorities (CA). These certificates contain the cryptographic keys that are used to verify the authenticity of websites.

When you browse to a website that uses SSL, the browser and the server first exchange certificates. The browser then verifies that the certificate provided by the server matches the server's DNS host name, that it has not expired, and that it is signed by a trusted certificate authority. The server may optionally verify the browser's certificate as well. If the certificates are approved, the browser and server then negotiate a master session key, using the previously exchanged certificates to protect it. That key is then used to encrypt all communications until the browser disconnects. This kind of data encapsulation is known as a tunnel.
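The expiry part of that check can be reproduced by hand with the OpenSSL command-line tool. This is only a sketch. The function name cert_still_valid is hypothetical, and it assumes the openssl program is installed. It tests the validity period alone, not the host name or the CA signature.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if the PEM certificate in $1 is still valid
# $2 seconds from now (default 0, i.e. right now). This covers only the
# "has not expired" check a browser performs; the host name match and the
# CA signature have to be verified separately.
cert_still_valid() {
    local cert_file=$1
    local margin=${2:-0}
    # "openssl x509 -checkend N" exits 0 if the certificate will NOT expire
    # within N seconds, and 1 if it will. Its message is discarded here.
    openssl x509 -in "$cert_file" -noout -checkend "$margin" >/dev/null
}
```

For a live server, you could fetch the certificate first with something like openssl s_client -connect www.example.net:443 -servername www.example.net </dev/null | openssl x509 > server.pem (www.example.net is a placeholder). After that, cert_still_valid server.pem 604800 succeeds only if the certificate is good for at least another week.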

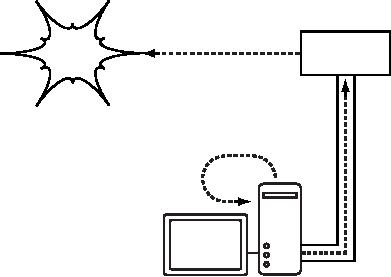

[Figure labels: Internet; Access point; "Eavesdroppers can watch unencrypted wireless traffic."; "Wireless traffic is protected by an encrypted tunnel."; "The tunnel can terminate anywhere on the Internet."]

Figure 6.4: Eavesdroppers must break strong encryption to monitor traffic over an encrypted tunnel. The conversation inside the tunnel is identical to any other unencrypted conversation.

The use of certificates with a PKI not only protects the communication from eavesdroppers, but also prevents so-called man-in-the-middle (MITM) attacks. In a man-in-the-middle attack, a malicious user intercepts all communication between the browser and the server. By presenting counterfeit certificates to both the browser and the server, the malicious user could carry on two simultaneous encrypted sessions. Since the malicious user knows the secret on both connections, it is trivial to observe and manipulate data passing between the server and the browser.

[Figure labels: User; Man-in-the-middle; Server]

Figure 6.5: The man-in-the-middle effectively controls everything the user sees, and can record and manipulate all traffic. Without a public key infrastructure to verify the authenticity of keys, strong encryption alone cannot protect against this kind of attack.

Use of a good PKI prevents this kind of attack. To succeed, the malicious user would have to present a certificate to the client that is signed by a trusted certificate authority. Unless a CA has been compromised (which is rare) or the user is tricked into accepting the forged certificate, such an attack is not possible. This is why it is vitally important that users understand that ignoring warnings about expired or improper certificates is very dangerous, especially when using wireless networks. By clicking the "ignore" button when prompted by their browser, users open themselves up to many potential attacks.

SSL is not only used for web browsing. Insecure email protocols such as IMAP, POP, and SMTP can be secured by wrapping them in an SSL tunnel. Most modern email clients support IMAPS and POP3S (secure IMAP and POP) as well as SSL/TLS protected SMTP. If your email server does not provide SSL support, you can still secure it with SSL using a package like Stunnel (http://www.stunnel.org/). SSL can be used to effectively secure just about any service that runs over TCP.
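Here is one way the Stunnel approach might look. The sketch prints a minimal server configuration that accepts SSL connections on the standard secure ports and passes the decrypted stream to plain POP3 (port 110) and IMAP (port 143) on the same machine. The function name and the certificate path are assumptions. Check the option names against the documentation for your Stunnel version.

```shell
#!/usr/bin/env bash
# Print a minimal stunnel server configuration that wraps plain POP3 and
# IMAP (running on this host) in SSL. The certificate path is an assumption;
# point it at your own certificate and key in PEM format.
stunnel_mail_conf() {
    local cert=${1:-/etc/stunnel/stunnel.pem}
    cat <<EOF
; stunnel listens on each "accept" port and forwards the decrypted
; stream to the matching "connect" port on the same machine.
cert = $cert

[pop3s]
accept  = 995
connect = 110

[imaps]
accept  = 993
connect = 143
EOF
}
```

You might save the output as Stunnel's configuration file and point mail clients at ports 995 (POP3S) and 993 (IMAPS).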

SSH

Most people think of SSH as a secure replacement for telnet, just as scp and sftp are the secure counterparts of rcp and ftp. But SSH is much more than an encrypted remote shell. Like SSL, it uses strong public key cryptography to verify the remote server and encrypt data. Instead of a PKI, it uses a cache of known host key fingerprints that is checked before a connection is permitted. It can use passwords, public keys, or other methods for user authentication.

Many people do not know that SSH can also act as a general purpose encrypting tunnel, or even an encrypting web proxy. By first establishing an SSH connection to a trusted location near (or even on) a remote server, you can protect insecure protocols from eavesdropping and attack. While this technique may be a bit advanced for many users, network architects can use SSH to encrypt traffic across untrusted links, such as wireless point-to-point links. Since the tools are freely available and run over standard TCP, any educated user can implement SSH connections for themselves, providing their own end-to-end encryption without administrator intervention.

OpenSSH (http://openssh.org/) is probably the most popular implementation on Unix-like platforms. Free implementations such as PuTTY (http://www.putty.nl/) and WinSCP (http://winscp.net/) are available for Windows. OpenSSH will also run on Windows under the Cygwin package (http://www.cygwin.com/). The examples below assume that you are using a recent version of OpenSSH.

[Figure labels: Internet; SSH server; "Web browser uses localhost port 3128 for its proxy"; "SSH listens for a TCP connection on localhost port 3128"; "All web traffic is encrypted by SSH"; "All traffic sent from SSH server is unencrypted"]

Figure 6.6: The SSH tunnel protects web traffic up to the SSH server itself.

To establish an encrypted tunnel from a port on the local machine to a port on the remote side, use the -L switch. For example, suppose you want to forward web proxy traffic over an encrypted link to the squid server at squid.example.net. Forward port 3128 (the default proxy port) using this command:

ssh -fN -g -L3128:squid.example.net:3128 squid.example.net


The -f switch instructs ssh to fork into the background after connecting, and -N tells it not to run a remote command, so the connection is used only for forwarding. The -g switch allows other users on your local segment to connect to the local machine and use it for encryption over the untrusted link. OpenSSH will use a public key for authentication if you have set one up, or it will prompt you for your password on the remote side. You can then configure your web browser to connect to localhost port 3128 as its web proxy service. All web traffic will then be encrypted before transmission to the remote side.

SSH can also act as a dynamic SOCKS4 or SOCKS5 proxy. This allows you to create an encrypting web proxy without the need to set up squid. Note that this is not a caching proxy; it simply encrypts all traffic.

ssh -fN -D 8080 remote.example.net

Configure your web browser to use SOCKS4 or SOCKS5 on local port 8080, and away you go.

SSH can encrypt data on any TCP port, including ports used for email. It can even compress the data along the way, which can decrease latency on low capacity links.

ssh -fNCg -L110:localhost:110 -L25:localhost:25 mailhost.example.net

The -C switch turns on compression. You can add as many port forwarding rules as you like by specifying the -L switch multiple times. Note that in order to bind to a local port less than 1024, you must have root privileges on the local machine.

These are just a few examples of the flexibility of SSH. By implementing public keys and using the SSH authentication agent (ssh-agent), you can automate the creation of encrypted tunnels throughout your wireless network, and protect your communications with strong encryption and authentication.
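You do not have to retype long command lines. The switches used in this section can be stored in the OpenSSH client configuration file, where LocalForward, GatewayPorts and Compression are the equivalents of -L, -g and -C. The sketch below assumes the hypothetical host alias squid-tunnel and the squid.example.net server from the example above. The function name is also illustrative.

```shell
#!/usr/bin/env bash
# Append a Host block to an OpenSSH client configuration file so the squid
# tunnel can be started by name. The alias "squid-tunnel" is hypothetical;
# the file defaults to ~/.ssh/config.
add_squid_tunnel() {
    local config_file=${1:-$HOME/.ssh/config}
    mkdir -p -m 700 "$(dirname "$config_file")"
    cat >>"$config_file" <<'EOF'

Host squid-tunnel
    HostName squid.example.net
    # Same as -L3128:squid.example.net:3128 on the command line
    LocalForward 3128 squid.example.net:3128
    # Same as -g: let other hosts on the local segment use the tunnel
    GatewayPorts yes
    # Same as -C: compress traffic over slow links
    Compression yes
    # Exit instead of running without the tunnel if the port is taken
    ExitOnForwardFailure yes
EOF
    chmod 600 "$config_file"
}
```

Once the block is in place, ssh -fN squid-tunnel brings up the same tunnel as the full command line. A DynamicForward 8080 line would similarly replace -D 8080.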

OpenVPN

OpenVPN is a free, open source VPN implementation built on SSL encryption. There are OpenVPN client implementations for a wide range of operating systems, including Linux, Windows 2000/XP and higher, OpenBSD, FreeBSD, NetBSD, Mac OS X, and Solaris. Being a VPN, it encapsulates all traffic (including DNS and all other protocols) in an encrypted tunnel, not just a single TCP port. Most people find it considerably easier to understand and configure than IPsec.

OpenVPN also has some disadvantages, such as fairly high latency. Some amount of latency is unavoidable since all encryption and decryption is done in user space, but using relatively new computers on either end of the tunnel can minimize this. While it can use traditional shared keys, OpenVPN really shines when used with SSL certificates and a certificate authority.

OpenVPN has many advantages that make it a good option for providing end-to-end security. Some of these reasons include:

• It is based on a proven, robust encryption protocol (SSL and RSA).
• It is relatively easy to configure.
• It functions across many different platforms.
• It is well documented.
• It's free and open source.

OpenVPN needs to connect to a single TCP or UDP port on the remote side. Once established, it can encapsulate all data down to the network layer, or even down to the data-link layer, if your solution requires it. You can use it to create robust VPN connections between individual machines, or simply use it to connect network routers over untrusted wireless networks.
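In OpenVPN, the choice between the network layer and the data-link layer is made with the dev directive: tun carries IP packets and tap carries Ethernet frames. The sketch below prints a minimal certificate-based server configuration. The port, file names and 10.8.0.0/24 address pool are illustrative assumptions, and the certificates must come from your own certificate authority. A bridged tap deployment usually needs extra setup on the server that is not shown here.

```shell
#!/usr/bin/env bash
# Print a minimal OpenVPN server configuration using certificates.
# $1 selects the layer: "tun" routes IP packets (network layer), "tap"
# carries Ethernet frames (data-link layer). File names, port and the
# 10.8.0.0/24 address pool are illustrative assumptions.
openvpn_server_conf() {
    local dev=${1:-tun}
    case $dev in
        tun|tap) ;;
        *) echo "usage: openvpn_server_conf [tun|tap]" >&2; return 1 ;;
    esac
    cat <<EOF
# A single UDP port is all the VPN needs on the remote side
port 1194
proto udp
dev $dev
# Certificates and keys issued by your own certificate authority
ca ca.crt
cert server.crt
key server.key
dh dh.pem
# Hand out client addresses from this pool
server 10.8.0.0 255.255.255.0
keepalive 10 120
EOF
}
```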

VPN

technology is a complex field,

and is a bit beyond the

scope of this

section

to go into more detail. It is

important to understand how

VPNs fit

into

the structure of your

network in order to provide

the best possible

pro-

tection

without opening up your

organization to unintentional

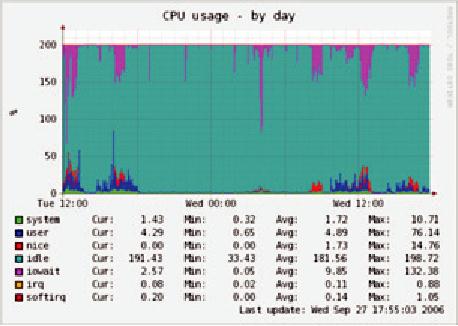

problems.

There are many good online resources that deal with installing OpenVPN on a server and client. We recommend this article from Linux Journal, http://www.linuxjournal.com/article/7949, as well as the official HOWTO, http://openvpn.net/howto.html.

Tor & Anonymizers

The Internet is basically an open network based on trust. When you connect to a web server across the Internet, your traffic passes through many different routers, owned by a great variety of institutions, corporations and individuals. In principle, any one of these routers has the ability to look closely at your data, seeing the source and destination addresses, and quite often also the actual content of the data. Even if your data is encrypted using a secure protocol, it is possible for your Internet provider to monitor the amount of data transferred, as well as the source and destination of that data. Often this is enough to piece together a fairly complete picture of your activities on-line.

Privacy and anonymity are important, and closely linked to each other. There are many valid reasons to consider protecting your privacy by anonymizing your network traffic. Suppose you want to offer Internet connectivity to your local community by setting up a number of access points for people to connect to. Whether you charge them for their access or not, there is always the risk that people use the network for something that is not legal in your country or region. You could plead with the legal system that this particular illegal action was not performed by you, but could have been performed by anyone connecting to your network. The problem would be neatly sidestepped if it were technically infeasible to determine where your traffic was actually headed.

And what about on-line censorship? Publishing web pages anonymously may also be necessary to avoid government censorship.

There are tools that allow you to anonymize your traffic in relatively easy ways. The combination of Tor (http://www.torproject.org/) and Privoxy (http://www.privoxy.org/) is a powerful way to run a local proxy server that will pass your Internet traffic through a number of servers all across the net, making it very difficult to follow the trail of information. Tor can be run on a local PC, under Microsoft Windows, Mac OS X, Linux and a variety of BSDs, where it anonymizes traffic from the browser on that particular machine. Tor and Privoxy can also be installed on a gateway server, or even a small embedded access point (such as a Linksys WRT54G), where they provide anonymity to all network users automatically.

Tor works by repeatedly bouncing your TCP connections across a number of servers spread throughout the Internet, and by wrapping routing information in a number of encrypted layers (hence the term onion routing) that are peeled off as the packet moves across the network. This means that, at any given point in the network, the source and destination addresses cannot be linked together. This makes traffic analysis extremely difficult.

Privoxy is needed alongside Tor because, in most cases, name server queries (DNS queries) are not passed through the proxy server. Someone analyzing your traffic could easily see that you were trying to reach a specific site (say, google.com) from the fact that you sent a DNS query to translate google.com to the appropriate IP address. Privoxy connects to Tor as a SOCKS4a proxy, which uses hostnames (not IP addresses) to get your packets to the intended destination.
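In Privoxy's configuration file, the SOCKS4a hand-off to Tor is a single forward-socks4a line. In that line, / matches every request, 127.0.0.1:9050 is the Tor client's default SOCKS port, and the final . means no further HTTP proxy is used. The helper below is a hypothetical sketch that appends this line only once. Its default file path is an assumption and varies between systems.

```shell
#!/usr/bin/env bash
# Make Privoxy hand every request to a local Tor client as a SOCKS4a proxy,
# so host names (not IP addresses) are resolved at the far end of the Tor
# circuit. Assumes Tor listens on its default SOCKS port, 9050.
privoxy_use_tor() {
    local config_file=${1:-/etc/privoxy/config}
    local rule='forward-socks4a / 127.0.0.1:9050 .'
    # Append the rule only if it is not there yet, so re-running is harmless.
    if ! grep -qxF "$rule" "$config_file" 2>/dev/null; then
        printf '%s\n' "$rule" >>"$config_file"
    fi
}
```

After restarting Privoxy, point the browser's HTTP proxy at Privoxy itself (127.0.0.1, port 8118 by default) rather than directly at Tor.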

In other words, using Privoxy with Tor is a simple and effective way to prevent traffic analysis from linking your IP address with the services you use online.

Combined with secure, encrypted protocols (such as those we have seen in this chapter), Tor and Privoxy provide a high level of anonymity on the Internet.

Network Monitoring

Network monitoring is the use of logging and analysis tools to accurately determine traffic flows, utilization, and other performance indicators on a network. Good monitoring tools give you both hard numbers and graphical aggregate representations of the state of the network. This helps you to visualize precisely what is happening, so you know where adjustments may be needed.

These tools can help you answer critical questions, such as:

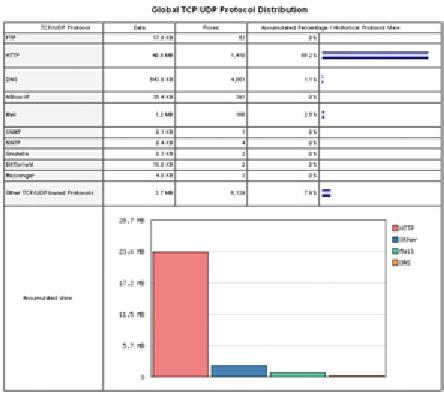

• What are the most popular services used on the network?
• Who are the heaviest network users?
• What other wireless channels are in use in our area?
• Are users installing wireless access points on our private wired network?
• At what time of the day is the network most utilized?
• What sites do our users frequent?
• Is the amount of inbound or outbound traffic close to our available network capacity?
• Are there indications of an unusual network situation that is consuming bandwidth or causing other problems?
• Is our Internet Service Provider (ISP) providing the level of service that we are paying for? This should be answered in terms of available bandwidth, packet loss, latency, and overall availability.

And perhaps the most important question of all:

• Do the observed traffic patterns fit our expectations?
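Once traffic samples are being logged, some of these questions take very little code to answer. Consider the question about the busiest time of day. The sketch below assumes a hypothetical log with one sample per line in the form "HH bytes" (hour of day, then the traffic counted in that sample). Real tools such as MRTG keep their data in their own formats, so the input would need converting first.

```shell
#!/usr/bin/env bash
# Read "HH bytes" lines on stdin and print the hour of day with the largest
# total traffic, followed by that total. The input format is an assumption
# made for this example, not the output of any particular tool.
busiest_hour() {
    awk '
        NF == 2 { total[$1] += $2 }   # sum the bytes seen in each hour
        END {
            for (h in total)
                if (best == "" || total[h] > total[best]) best = h
            if (best != "") printf "%s %.0f\n", best, total[best]
        }'
}
```

For example, printf '09 100\n13 500\n09 300\n17 200\n13 150\n' | busiest_hour prints 13 650. If several hours tie, which one is reported depends on awk's internal ordering.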

Let's look at how a typical system administrator can make good use of network monitoring tools.

An effective network monitoring example

For the purposes of this example, let's assume that we are in charge of a network that has been running for three months. It consists of 50 computers and three servers: email, web, and proxy servers. While things go well at first, users begin to complain of slow network speeds and an increase in spam emails. As time goes on, computer performance slows to a crawl (even when not using the network), causing considerable frustration among your users.

With frequent complaints and very low computer usage, the Board is questioning the need for so much network hardware. The Board also wants evidence that the bandwidth they are paying for is actually being used. As the network administrator, you are on the receiving end of these complaints. How can you diagnose the sudden drop in network and computer performance and also justify the network hardware and bandwidth costs?


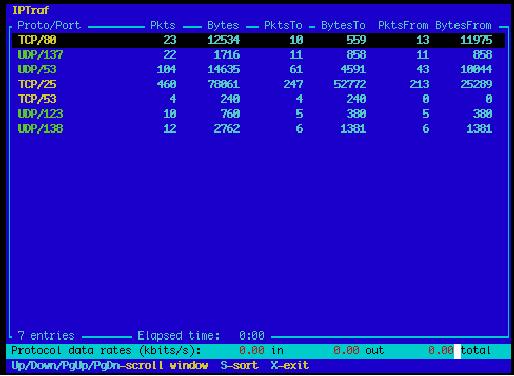

Monitoring

the LAN (local

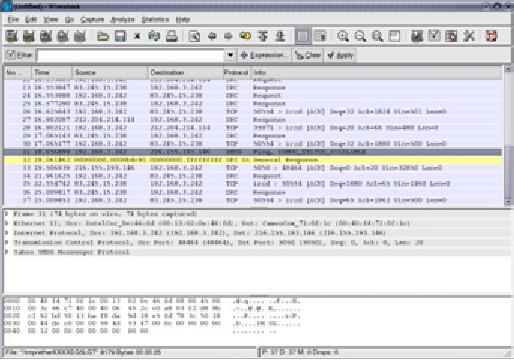

traffic)

To get an idea of exactly what is causing the slowdown, you should begin by looking at traffic on the local LAN. There are several advantages to monitoring local traffic:

• Troubleshooting is greatly simplified.
• Viruses can be detected and eliminated.
• Malicious users can be detected and dealt with.
• Network hardware and resources can be justified with real statistics.

Assume that all of the switches support the Simple Network Management Protocol (SNMP). SNMP is an application-layer protocol designed to facilitate the exchange of management information between network devices. By assigning an IP address to each switch, you are able to monitor all the interfaces on that switch, observing the entire network from a single point. This is much easier than enabling SNMP on all computers in a network.

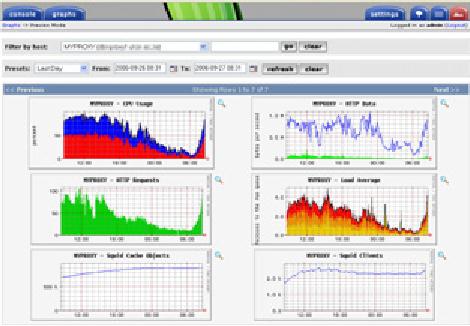

By using a free tool such as MRTG (see Page 190), you can monitor each port on the switch and present data graphically, as an aggregate average over time. The graphs are accessible from the web, so you are able to view them from any machine at any time.
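Each point on such a graph comes from simple counter arithmetic. SNMP devices expose per-interface octet counters (for example IF-MIB::ifInOctets). A poller like MRTG reads them at a fixed interval, five minutes by default, and converts the difference into a rate. Below is a minimal sketch of that conversion. It assumes 32-bit counters that wrap at most once between readings, and the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Convert two readings of an SNMP octet counter (such as IF-MIB ifInOctets)
# into an average rate in bits per second over the polling interval.
# Handles a single wrap of a 32-bit Counter32 between the two readings.
octets_to_bps() {
    local old=$1 new=$2 seconds=$3
    local delta=$((new - old))
    if [ "$delta" -lt 0 ]; then
        # The counter passed 2^32 - 1 and started again from zero.
        delta=$((delta + 4294967296))
    fi
    # 8 bits per octet, averaged over the polling interval
    echo $((delta * 8 / seconds))
}
```

For example, octets_to_bps 1000000 46000000 300 prints 1200000, i.e. 1.2 Mbps over a five-minute interval. With the net-snmp tools, you could take a single reading with snmpget -v2c -c public -Oqv switch1 IF-MIB::ifInOctets.1. The community string, host name and interface index there are placeholders for your own values.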

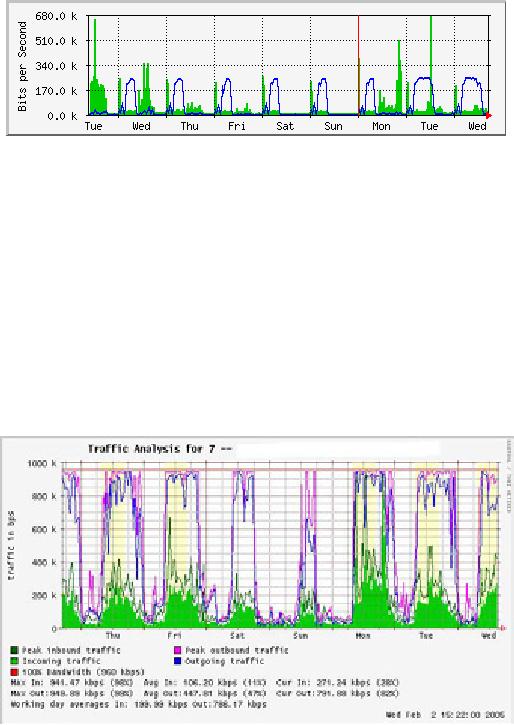

With MRTG monitoring in place, it becomes obvious that the internal LAN is swamped with far more traffic than the Internet connection can support, even when the lab is unoccupied. This is a pretty clear indication that some of the computers are infested with a network virus. After installing good anti-virus and anti-spyware software on all of the machines, the internal LAN traffic settles down to expected levels. The machines run much more quickly, spam emails are reduced, and the users' morale quickly improves.

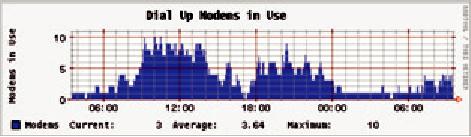

Monitoring the WAN (external traffic)

In addition to watching the traffic on the internal LAN, you need to demonstrate that the bandwidth the organization is paying for is actually what they are getting from their ISP. You can achieve this by monitoring external traffic.

External traffic is generally classified as anything sent over a Wide Area Network (WAN). Anything received from (or sent to) a network other than your internal LAN also qualifies as external traffic. The advantages of monitoring external traffic include:

• Internet bandwidth costs are justified by showing actual usage, and whether that usage agrees with your ISP's bandwidth charges.
• Future capacity needs are estimated by watching usage trends and predicting likely growth patterns.
• Intruders from the Internet are detected and filtered before they can cause problems.

Monitoring this traffic is easily done with the use of MRTG on an SNMP-enabled device, such as a router. If your router does not support SNMP, you can add an SNMP-capable switch between your router and your ISP connection, and monitor the port traffic just as you would with an internal LAN.
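Comparing measured traffic with what the ISP sells comes down to simple arithmetic on the polled counters. Suppose the octet counter on the edge router grows by ΔO octets over a polling interval of Δt seconds, and the contracted capacity is C bits per second. The average utilization U for that interval is then as follows. The numbers in the worked example are illustrative, not taken from a real link.

```latex
U = \frac{8\,\Delta O}{\Delta t \cdot C}
\qquad\text{e.g.}\qquad
U = \frac{8 \times 45\,000\,000\ \text{octets}}{300\ \text{s} \times 2\,000\,000\ \text{bit/s}}
  = \frac{360\,000\,000}{600\,000\,000} = 0.6 = 60\%
```

Values that stay close to 100% for long stretches mean the link is saturated during those periods.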

Detecting

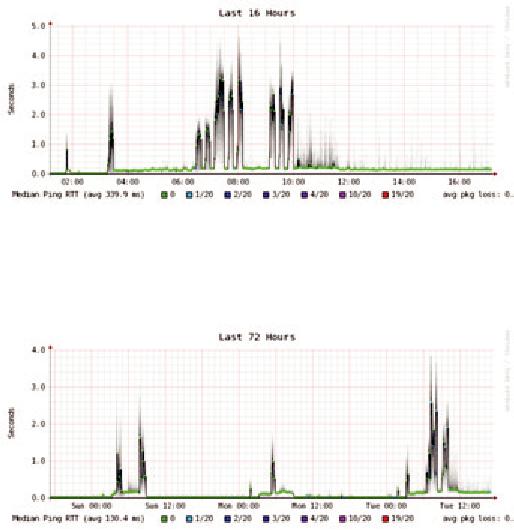

Network Outages

With

monitoring tools in place,

you now have an accurate

measurement of

how

much bandwidth the

organization is using. This

measurement should

agree

with your ISP's bandwidth

charges. It can also

indicate the actual

throughput

of your connection if you

are using close to your

available capac-

ity

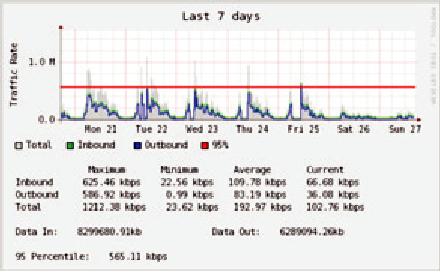

at peak times. A "flat top"

graph is a fairly clear

indication that you

are

operating

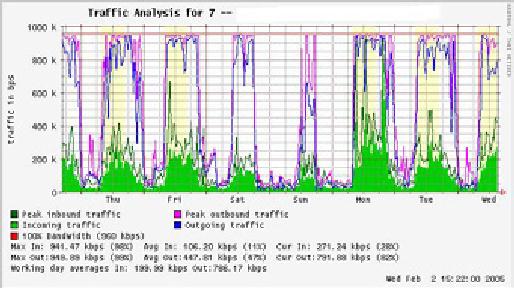

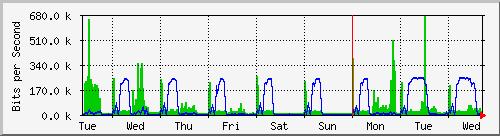

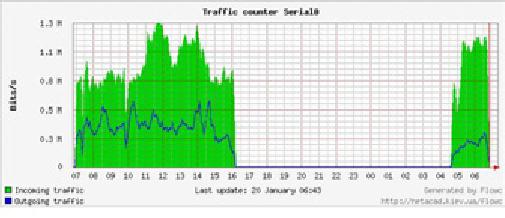

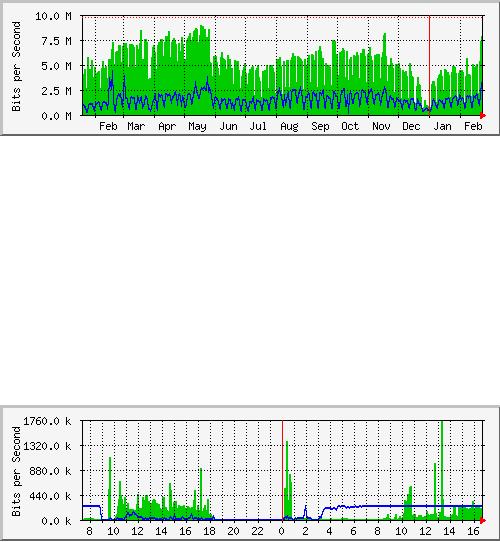

at full capacity. Figure

6.7 shows

flat tops in

peak outbound traffic

in

the middle of every day

except Sunday.

It

is clear that your current

Internet connection is overutilized at

peak times,

causing

network lag. After

presenting this information to

the Board, you

can

make

a plan for further

optimizing your existing

connection (by

upgrading

your

proxy server and using

other techniques in this

book) and estimate

how

soon

you will need to upgrade

your connection to keep up

with the demand.

This

is also an excellent time to

review your operational

policy with the

Board,

and discuss ways to bring

actual usage in line with

that policy.

Figure

6.7: A graph with a "flat

top" is one indication of

overutilization.
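A flat top can also be spotted numerically instead of by eye. The sketch below reads one rate sample per line, in bits per second, and prints the share of samples that came within a threshold of the link capacity. The function name and the 95% default threshold are assumptions, not standard values.

```shell
#!/usr/bin/env bash
# Read one rate sample per line (bits per second) on stdin and print the
# percentage of samples at or above a threshold of the link capacity.
# $1 = link capacity in bits/s, $2 = threshold in percent (default 95).
# A large result suggests the "flat top" of a saturated link.
flat_top_share() {
    local capacity=$1 threshold=${2:-95}
    awk -v cap="$capacity" -v pct="$threshold" '
        NF { n++; if ($1 * 100 >= cap * pct) hot++ }   # count near-capacity samples
        END { if (n) printf "%d%%\n", hot * 100 / n }'
}
```

For example, printf '1950000\n2000000\n500000\n1000000\n' | flat_top_share 2000000 prints 50%, because two of the four samples are at or above 1.9 Mbps on a 2 Mbps link.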


Later in the week, you receive an emergency phone call in the evening. Apparently, no one in the lab can browse the web or send email. You rush to the lab and hastily reboot the proxy server, with no results. Browsing and email are still broken. You then reboot the router, but there is still no success. You continue eliminating the possible fault areas one by one until you realize that the network switch is off: a loose power cable is to blame. After applying power, the network comes to life again.

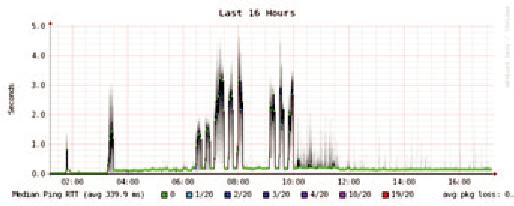

How

can you troubleshoot such an

outage without such time

consuming trial

and

error? Is it possible to be

notified of outages

as they occur, rather

than

waiting

for a user to complain? One

way to do this is to use a

program such

as

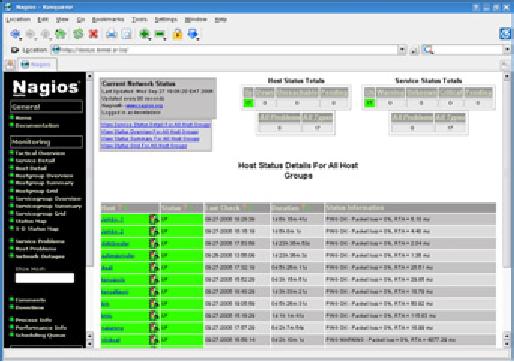

Nagios

that

continually polls network

devices and notifies you of

outages.

Nagios will report on the availability of various machines and services, and will alert you to machines that have gone down. In addition to displaying the network status graphically on a web page, it can send notifications via SMS or email, alerting you immediately when problems arise.
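Nagios itself does not measure anything. It runs small plugin programs and acts on their exit status: 0 means OK, 1 WARNING, 2 CRITICAL and 3 UNKNOWN. Each plugin also prints a one-line status message on standard output. Below is a minimal sketch of a plugin in that style. It assumes the caller has already measured a whole-number value, and the function name and message format are illustrative.

```shell
#!/usr/bin/env bash
# A Nagios-style check: compare a measured value against warning and
# critical thresholds and report using the standard plugin exit codes
# (0 OK, 1 WARNING, 2 CRITICAL, 3 UNKNOWN). How the value is measured
# (ping time, link load, disk use...) is left out of this sketch.
check_threshold() {
    local name=$1 value=$2 warn=$3 crit=$4
    case $value in
        ''|*[!0-9]*)
            echo "$name UNKNOWN - value '$value' is not a whole number"
            return 3 ;;
    esac
    if [ "$value" -ge "$crit" ]; then
        echo "$name CRITICAL - $value (critical at $crit)"
        return 2
    elif [ "$value" -ge "$warn" ]; then
        echo "$name WARNING - $value (warning at $warn)"
        return 1
    fi
    echo "$name OK - $value"
    return 0
}
```

For example, check_threshold PING_MS 120 100 500 prints PING_MS WARNING - 120 (warning at 100) and returns 1. Registering such a script as a Nagios command is a separate configuration step not shown here.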

With good monitoring tools in place, you will be able to justify the cost of equipment and bandwidth by effectively demonstrating how they are being used by the organization. You are notified automatically when problems arise, and you have historical statistics of how the network devices are performing. You can check the current performance against this history to find unusual behavior, and head off problems before they become critical. When problems do come up, it is simple to determine the source and nature of the problem. Your job is easier, the Board is satisfied, and your users are much happier.

Monitoring your network

Managing a network without monitoring is similar to driving a vehicle without a speedometer or a fuel gauge, with your eyes closed. How do you know how fast you are going? Is the car consuming fuel as efficiently as promised by the dealers? If you do an engine overhaul several months later, is the car any faster or more efficient than it was before?

Similarly, how can you pay for an electricity or water bill without seeing your monthly usage from a meter? You must have an account of your network bandwidth utilization in order to justify the cost of services and hardware purchases, and to account for usage trends.

There are several benefits to implementing a good monitoring system for your network:

1. Network budget and resources are justified. Good monitoring tools can demonstrate without a doubt that the network infrastructure (bandwidth, hardware, and software) is suitable and able to handle the requirements of network users.
2. Network intruders are detected and filtered. By watching your network traffic, you can detect attackers and prevent access to critical internal servers and services.
3. Network viruses are easily detected. You can be alerted to the presence of network viruses, and take appropriate action before they consume Internet bandwidth and destabilize your network.
4. Troubleshooting of network problems is greatly simplified. Rather than attempting "trial and error" to debug network problems, you can be instantly notified of specific problems. Some kinds of problems can even be repaired automatically.
5. Network performance can be highly optimized. Without effective monitoring, it is impossible to fine tune your devices and protocols to achieve the best possible performance.
6. Capacity planning is much easier. With solid historical performance records, you do not have to "guess" how much bandwidth you will need as your network grows.
7. Proper network usage can be enforced. When bandwidth is a scarce resource, the only way to be fair to all users is to ensure that the network is being used for its intended purpose.

Fortunately, network monitoring does not need to be an expensive undertaking. There are many freely available open source tools that will show you exactly what is happening on your network in considerable detail. This section will help you identify many invaluable tools and how best to use them.

The dedicated monitoring server

While monitoring services can be added to an existing network server, it is often desirable to dedicate one machine (or more, if necessary) to network monitoring. Some applications (such as ntop) require considerable resources to run, particularly on a busy network. But most logging and monitoring programs have modest RAM and storage requirements, and typically need little CPU power.

Because open source operating systems (such as Linux or BSD) make very efficient use of hardware resources, it is possible to build a very capable monitoring server from recycled PC parts. There is usually no need to purchase a brand new server to relegate to monitoring duties.

The exception to this rule is in very large installations. If your network includes more than a few hundred nodes, or if you consume more than 50 Mbps of Internet bandwidth, you will likely need to split up monitoring duties between a few dedicated machines. This depends largely on exactly what you want to monitor. If you are attempting to account for all services accessed per MAC address, this will consume considerably more resources than simply measuring network flows on a switch port. But for the majority of installations, a single dedicated monitoring machine is usually enough.

Consolidating monitoring services on a single dedicated machine not only streamlines administration and upgrades, but also helps ensure better ongoing monitoring. For example, if you install monitoring services on a web server, and that web server develops problems, then your network may not be monitored until the problem is resolved.

To a network administrator, the data collected about network performance is nearly as important as the network itself. Your monitoring should be robust and protected from service outages as well as possible. Without network statistics, you are effectively blind to problems with the network.

Where does the server fit in my network?

If you are only interested in collecting network flow statistics from a router, you can do this from just about anywhere on the LAN. This provides simple feedback about utilization, but cannot give you comprehensive details about usage patterns. Figure 6.8 shows a typical MRTG graph generated from the Internet router. While the inbound and outbound utilization are clear, there is no detail about which computers, users, or protocols are using bandwidth.

Figure 6.8: Polling the edge router can show you the overall network utilization, but you cannot break the data down further into machines, services, and users.

For more detail, the dedicated monitoring server must have access to everything that needs to be watched. Typically, this means it must have access to the entire network. To monitor a WAN connection, such as the Internet link to your ISP, the monitoring server must be able to see the traffic passing through the edge router. To monitor a LAN, the monitoring server is typically connected to a monitor port (often called a mirror or SPAN port) on the switch. If multiple switches are used in an installation, the monitoring server may need a connection