
Cognitive Psychology PSY 504

VU

Lesson 21

PATTERN RECOGNITION (CONTINUED)

Neural Networks

This model is also called PDP, or Parallel Distributed Processing. McClelland and Rumelhart (1981) built a pattern recognition network that resolves the paradox of bottom-up versus top-down processing.

McClelland and Rumelhart implemented this network to model our use of word structure to facilitate the recognition of individual letters. In this model, individual features are combined to form letters, and individual letters are combined to form words. This is a connectionist model. It depends heavily on excitatory and inhibitory activation processes. Activation spreads from the features to excite the letters, and from the letters to excite the words. Alternative letters and words inhibit each other. Activation can also spread down from the words to excite their component letters. In this way a word can support the activation of a letter and hence promote its recognition.

In such a system, activation will tend to accumulate at one word, which will repress the activation of other words through inhibition. The dominant word will support the activation of its component letters, and these letters will repress the activation of alternative letters. The word superiority effect is due to the support a word gives to its component letters.

The computation proposed by McClelland and Rumelhart's interactive activation model is extremely complex, as is the computation of any model that simulates neural processing. The model helps us understand how neural processing underlies pattern recognition.

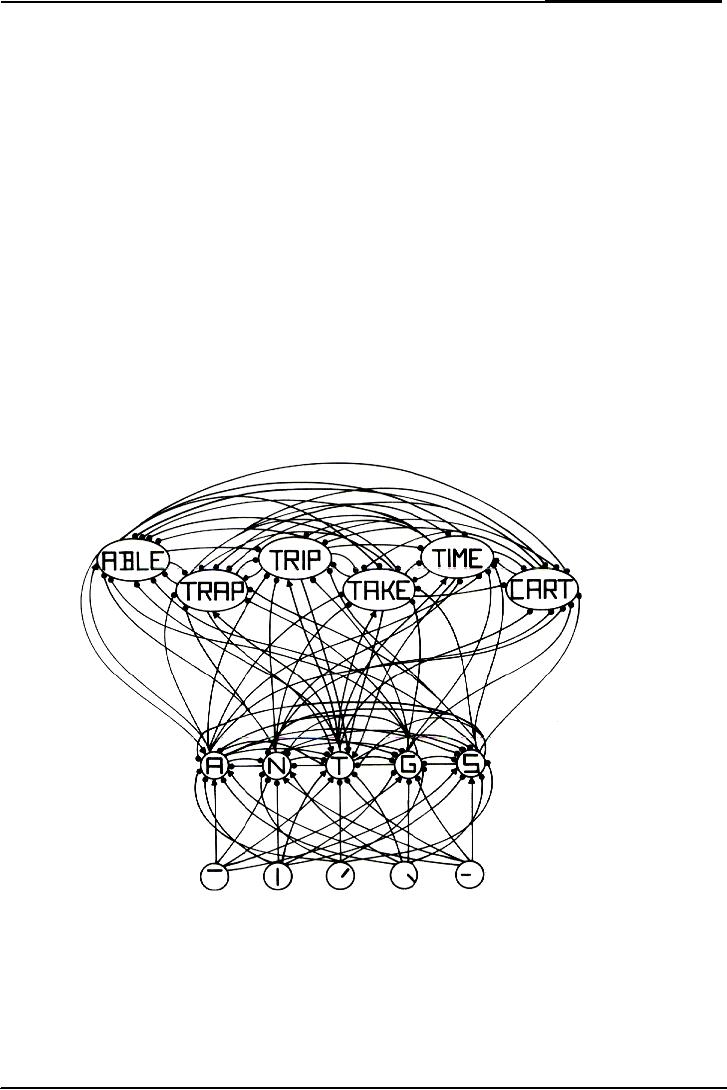

The figure of this model

is

given

below.

This

is the part of pattern-recognition

network proposed by McClelland

and Rumelhart to

perform

word

recognition by performing calculation on

neural activation values.

Connections with

arrowheads

indicate excitatory connections

from the source to the

head. Connections

with

rounded

heads indicate inhibitory

connections from the source

to the head. This net is

making

network.


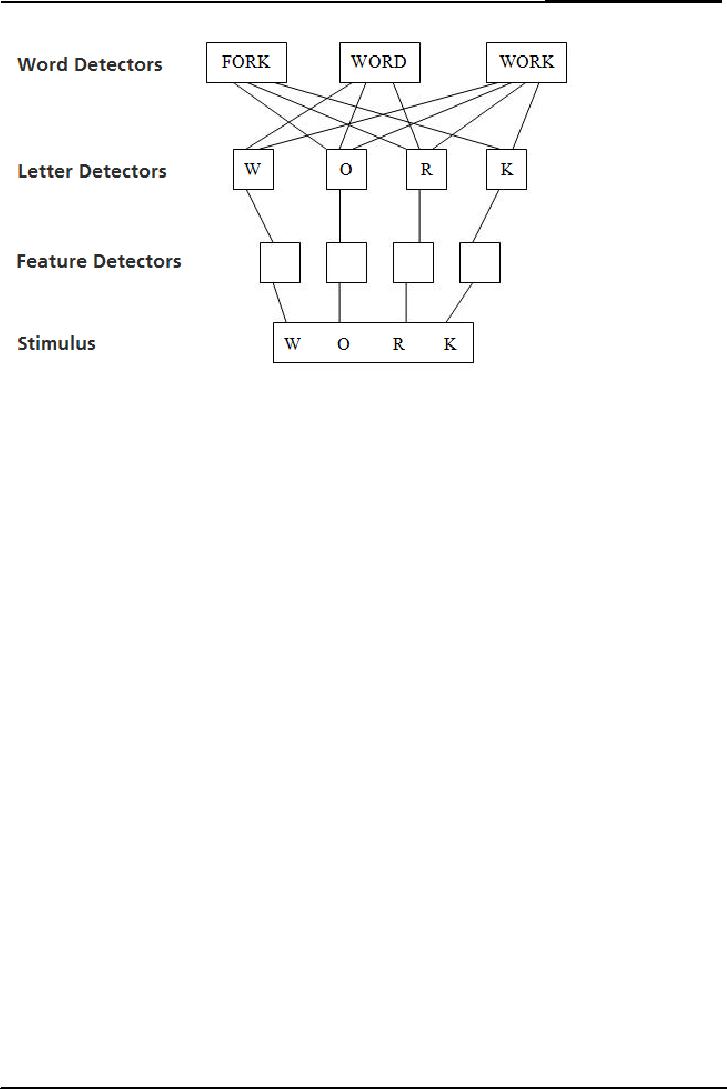

This is a simpler version of the neural network. The diagram shows the word WORK. At one level there are feature detectors, which are not shown. At another level there are letter detectors. We recognize W because of its features; O, R and K are recognized in the same way. Words are activated by their letters. Four words, including WORK, are activated, and the other three are competitors of WORK in word recognition. WORK is recognized through its four letters. This is the parallel distributed processing model. It is also called the neural network model of pattern recognition. It is called a neural network because it is built from abstract units called nodes.
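The WORK example can be made concrete with a toy simulation. The sketch below is only an illustration of the idea, not McClelland and Rumelhart's actual model: the competitor words (WORD, FORK), the evidence values, and every parameter (`rate`, `excite`, `inhibit`, `feedback`) are assumptions chosen for the example. Letters excite the words that contain them, words inhibit each other, and words feed activation back down to their letters.

```python
def clip(x):
    """Keep an activation value inside [0, 1]."""
    return max(0.0, min(1.0, x))


def interactive_activation(evidence, words, steps=30, rate=0.5,
                           excite=0.25, inhibit=0.5, feedback=0.5):
    """Toy letter/word network.

    evidence maps (position, letter) -> bottom-up support from the
    feature level (the feature detectors themselves are not modelled).
    """
    letters = dict(evidence)                  # letter nodes start at their evidence
    word_act = {w: 0.0 for w in words}        # word nodes start inactive
    for _ in range(steps):
        # Bottom-up: letters excite words; alternative words inhibit each other.
        new_words = {}
        for w in words:
            support = sum(letters.get((pos, ch), 0.0) for pos, ch in enumerate(w))
            rivals = sum(a for other, a in word_act.items() if other != w)
            net = excite * support - inhibit * rivals
            new_words[w] = clip(word_act[w] + rate * (net - word_act[w]))
        # Top-down: words excite their letters; letters competing for the
        # same position inhibit each other.
        new_letters = {}
        for (pos, ch), act in letters.items():
            top_down = sum(a for w, a in word_act.items() if w[pos] == ch)
            rivals = sum(a for (p, c), a in letters.items() if p == pos and c != ch)
            net = evidence[(pos, ch)] + feedback * top_down - inhibit * rivals
            new_letters[(pos, ch)] = clip(act + rate * (net - act))
        word_act, letters = new_words, new_letters
    return letters, word_act


# W, O and R are seen clearly; the K is degraded (weak feature evidence).
evidence = {(0, "W"): 0.8, (1, "O"): 0.8, (2, "R"): 0.8, (3, "K"): 0.3}
lexicon = ["WORK", "WORD", "FORK"]            # hypothetical competitors

with_words, word_act = interactive_activation(evidence, lexicon)
alone, _ = interactive_activation(evidence, lexicon, feedback=0.0)

print("word activations:", word_act)
print("K with word feedback:", with_words[(3, "K")])
print("K without word feedback:", alone[(3, "K")])
```

With word feedback switched on, the degraded K ends up more active than it does with feedback switched off, which is the word superiority effect in miniature. WORK also ends up as the most active word, because it is the only candidate supported by all four letters.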

Neural Networks

Neural networks consist of:

- Nodes
- Links
  - Excitatory
  - Inhibitory
- Weights
  - Learning consists of re-adjustment of weights

Nodes

Nodes are a set of processing units. Nodes should not be confused with neurons. Nodes are a hardware-level description. In the interactive activation model, the nodes represent features, letters and words. They can acquire different levels of activation. All boxes in the above diagram are nodes. The lines are links; nodes are connected through these lines.

Patterns of connections

Nodes are connected to each other by excitatory or inhibitory connections that differ in strength.

Another important concept is activation rules. These specify how a node combines its excitatory and inhibitory inputs with its current state of activation.
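An activation rule of this kind can be written as a small function. The sketch below loosely follows the style of update used in interactive activation models, but the exact form and all constants (`a_max`, `a_min`, `rest`, `decay`) are illustrative assumptions, not values taken from the lecture.

```python
def update_activation(a, excitatory, inhibitory,
                      a_max=1.0, a_min=-0.2, rest=0.0, decay=0.1):
    """Return a node's next activation.

    a          -- the node's current state of activation
    excitatory -- list of weighted excitatory inputs (non-negative)
    inhibitory -- list of weighted inhibitory inputs (non-negative)
    """
    net = sum(excitatory) - sum(inhibitory)
    if net > 0:
        # Excitation pushes the node toward its maximum; the push shrinks
        # as the node gets closer to that maximum.
        effect = net * (a_max - a)
    else:
        # Inhibition pushes the node toward its minimum.
        effect = net * (a - a_min)
    # With no input, the node decays back toward its resting level.
    new_a = a + effect - decay * (a - rest)
    return max(a_min, min(a_max, new_a))


excited = update_activation(0.0, excitatory=[0.3, 0.2], inhibitory=[0.1])
inhibited = update_activation(0.5, excitatory=[], inhibitory=[0.5])
resting = update_activation(0.5, excitatory=[], inhibitory=[])
print(excited, inhibited, resting)
```

The design point is that the same net input has a different effect depending on the node's current state: a node that is already near its ceiling gains little from further excitation.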


Excitatory connections

These are connections that make other nodes active. Nodes that receive input through excitatory connections become active, or charged.

Inhibitory connections

These are connections that make other nodes relax and switch off.

The neural network exists because of these two kinds of connections.

State of Activation

Nodes can be activated to various degrees. We become conscious of nodes that are activated above a threshold level of conscious awareness. We become aware of the letter K in the word WORK when it receives enough excitatory influence from the feature and word levels.

A Learning Rule

Learning generally occurs by changing the weights of the excitatory and inhibitory connections between the nodes. The learning rule specifies how to make these changes in the weights.

- Initial weights
- Re-adjustment of weights
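The lecture does not say which learning rule is meant. As one common possibility, the sketch below uses the delta rule: each weight is nudged in proportion to the error between the desired and actual output. The training samples, learning rate, and number of epochs are assumptions made up for the example.

```python
def train_delta_rule(samples, n_inputs, lr=0.1, epochs=50):
    """Learn connection weights for a single output node.

    samples -- list of (inputs, target) pairs
    Positive weights act as excitatory connections,
    negative weights as inhibitory ones.
    """
    weights = [0.0] * n_inputs                    # initial weights
    for _ in range(epochs):
        for inputs, target in samples:
            output = sum(w * x for w, x in zip(weights, inputs))
            error = target - output
            # Re-adjustment of weights: move each weight a small step
            # in the direction that reduces the error.
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
    return weights


# Input 0 should switch the output node on; input 1 should switch it off.
samples = [([1, 0], 1.0), ([0, 1], -1.0)]
weights = train_delta_rule(samples, n_inputs=2)
print(weights)
```

After training, the first weight is positive (an excitatory connection) and the second is negative (an inhibitory one), even though both started at zero.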

PDP and learning

The learning component is the most important feature of a neural network model because it enables the network to improve its performance. In a lab in California, a computer learns how to speak by reading and re-reading simple English sentences, improving from its own mistakes.

PDP and its significance

Parallel processing models have improved computer functioning and have led to supercomputers. Supercomputers are called parallel computers. Multiple processors that communicate with each other work faster than serial processing computers.

The paradoxes are resolved: forests are seen at the same time as the trees, and words are seen at the same time as the letters. Context helps in object perception; object perception helps in perception of context.
