|

Lecture-21

Introduction

to Data Quality Management

(DQM)

Data is

the "fuel" on which a Data

Warehouse (DWH) runs. One of

the main reasons for

the

failure of

DWH deployments is data quality or

lack of it. Unfortunately the

problems of data

quality

are usually underestimated, leading to

bad decisions, traumatic

results and expensive

fixes.

There

can be a whole course on

data quality. The goal of this

lecture is to expose the

reader to the

data

quality issues, and to

motivate them to understand more

about quality management

and

quality control.

This will enable the

reader to relate quality

management to the DWH,

because

usually

quality control relates to manufacturing

than Data

Warehousing.

Consider the

analogy of a car. You may

have the best car,

the bes t road conditions

and the best

driver on

the seat. But if the

fuel is contaminated, or is not of the

right octane, either the car

will

not run, or

will run, but not give

the right

performance.

What is

Quality? Informally

Some

things are better than

others i.e. they are of

higher quality. How much "better" is

better?

Is the

right item the best

item to purchase? How about

after the purchase?

What is quality

of service? The bank

example

The

best way to look at data

quality is to look at what quality

means in the general

marketplace

and

then translate what quality means

for data. As consumers,

human beings consciously

or

subconsciously

judge the "quality" of things

during their life -time. A

conscious application of

quality

measurement is when a person compares

products in a store and

chooses one of them

as

the

"right" product. "Right"

here means selecting the

product that best meets

his/her overall

needs. Note

that the product bought may not

necessarily have the best

features in every

category.

After

pur chase, people establish

quality based on a product-price comparison

i.e. whether that

product

for its price met their

expectations as per its

intended use?

What is

Quality? Formally

Quality is

conformance to requirements"

P. Crosby,

"Quality is Free"

1979

Degree of

excellence"

Webster's

Third New International Dictionary

Conformance to

requirements does not imply simply

conformation to written

specifications.

Customers'

requirements may be formal

and written, or informal mental

expectations of meeting

their purpose or

satisfying their needs.

166

If a product

meets formally defined "requirement

specifications," yet fails to be a

quality product

from

the customers' pe rspective,

this means the requirements

were defective.

If an application is

designed and built to meet

the

functional requirements signed

off by the

business

sponsors, and during formal

testing the business

sponsors reject the application as

not

meeting

their needs, what does that

mean? Either the requirements

specification or the analysis

and

design process is defective.

What is

Quality? Examples from Auto

Industry

Quality

means meeting customer's

needs, not necessarily exceeding

them.

Quality means

improving things customers

care

about, because

that makes their lives

easier and

more

comfortable.

Why example

from auto-industry?

The

luxury automobile producer Rolls Royce

went bankrupt in the early 1980s.

Analysis revealed

that,

among other things , Rolls Royce was

improving components that

the luxury automobile

customers

felt were irrelevant and

polishing parts they did not

care about. This drove

the price

beyond what

the luxury automobile

customer felt was value

for their money.

On the other ha

nd, when Lexus decided to

make its first major

redesign of its highly rated

L8 400

luxury

automobile, company representatives asked

for help from their existing

customers. They

even

visited the homes of a

variety of L8 400 owners to observe home furnishings,

what kind of

leather

they had on their brief

cases, and other minute details to

get an idea of their

customer's

subconscious

expectations.

What is

Data Quality?

What is

Data?

All

data is an abstraction of something

real

Intrinsic

Data Quality

Electronic

reproduction of reality.

Realistic

Data Quality

Degree of

utility or value of data to

business

Figure-21.1:

What is Data Quality?

What is

data?

Understand

that all data is an

abstraction or a representation of

something real. Data is

an

equivalent

reproduction of something real. Figure

21.1 shows an employee data.

Data in a

167

database or

data warehouse has no actual

value; it only has potential

value. Data has a real

value

only

when someone uses it to do

something useful; for example, to

send the utility bill

to

customer at

the correct address for

timely payment, or to successfully go

with the change in a

product

based on the knowledge of customer

likes/dislikes.

What Is

Data Quality?

There are

two significant definitions of dat a quality.

One is its intrinsic quality, and

the other is its

realistic

quality. Intrinsic data quality is

the correctness or accuracy of

data. Realistic data

quality

is the

value that correct data

has in supporting the work of

the business or enterprise.

Data that

does not help or

enable the enterprise to

accomplish its mission has

no quality, no matter how

accurate it

is.

Intrinsic

Data Quality

Intrinsic

data quality, simply stated, is data

accuracy. Intrinsic data

quality is the degree to

which

data

accurately reflects the

real-world object that the

data represents. If all

facts that an

organization

needs to know about an

entity are accurate, that

data has intrinsic quality -it is

an

electronic

reproduction of reality. Intrinsic data quality

means that data is

correct.

Realistic

Data Quality

Realistic data

quality is the degree of

utility and value the

data has to support the

enterprise

processes

that enable accomplishing

enterprise objectives. Fundamentally,

realistic data quality is

the

degree of customer satisfaction

generated by the knowledge workers who

use it to do their

jobs.

Realistic data quality is

the degree to which data

enables knowledge workers to meet

enterprise

objectives efficiently and

effectively.

Data

Quality & Organizations

Intelligent

Learning Organization:

High -quality

information is an open,

shared resource

with value-adding

processes.

The

dysfunctional learning organization: Low -quality

data is a proprietary

resource

with cost-

adding

processes.

The

intelligent learning organ ization is one

that maximizes both its

experience and its

information

resources in

the learning process. The intelligent

learning organization shares information

openly

across

the enterprise in a way that

maximizes the throughput of the entire

organization . There are

no procedural

walls, and the data users

capture data and retrieve

makes the business to

grow.

In the

Information Age, the dysfunctional

learning organization is at a distinct

disadvantage. The

term

dysfunctional means "impaired or abnormal

functioning."

168

Orr's

Laws of Data Quality

Law #1 - "Data

that is not used cannot be

correct!"

Law #2 - "Data

quality is a function of its use,

not its collection!"

Law #3 - "Data

will be no better than its

most stringent use!"

Law #4 - "Data

quality problems increase

with the age of the

system!"

Law #5

"The less likely something

is to occur, the more

traumatic it will be when it

happens!"

We will

only know about the

quality of the data (good or

bad) once we start using it. IF

the data

has

never been used, then it

would be na�ve and self

deceiving to assume that it is of

the right

quality. A realistic

approach would be to assume

that it is not of the right quality, IF

it ha snot

been

used.

As the

data is continued to be used, its

quality issues come up and

as a consequence its quality

gets

improved. A good analogy could be

that of a machine, a well

oiled machine which is

being

used is

likely to continue to work as

compared to a large machine that

has not been used for

a

long

time.

The

third law kind of supports

or goes with the second

law i.e. the more stringent

use of the data

is,

the more likely that the

quality issues that crop up

will be identified and

fixed. Consider the

analogy of a

map. A street map given in

an atlas is fine as long as using it you

can find the

major

shopping

areas, however, you may be

surprised that you may not be

able to find your house

using

that

map, because the map

was not meant to handle the

stringent use of locating your

house.

Law-4

can have two possible exp

lanations. One is rather obvious

i.e. the shelf life of

storage

medium which is

very low for diskettes,

comparatively better for magnetic

tapes and very

high

for

CDs. Depending on which medium was

used to store the data

will dictate the data

quality. The

other possibility is

the meta data or people who

are aware of the data

anomalies but leave

the

organization

over a period of time by retiring or

changing jobs or die.

Total

Quality Management (TQM)

Philosophy of

involving all for systematic

and continuous improvement.

It is customer

oriented. Why?

TQM

incorporates the concept of product

quality, process control, quality

assurance, and

quality

improvement.

Quality

assurance is NOT Quality

improvement

TQM approach is

advocating the involvement of all

employees in the continuous

improvement

process,

the ultimate goal being the customer

satisfaction.

The

TQM philosophy of management is

customer-oriented. All members of a

total quality

management

(control) organization strive to

systematically manage the improvement of

the

169

organization through

the ongoing participation of all

employees in problem solving

efforts across

functional

and hierarchical boundaries.

Quality

assurance is a system of activities that

assures conformance of product to pre

-established

requirements.

Quality improvement is making

all efforts to increase

effectiveness and efficiency in

meeting

accepted

customer expectations.

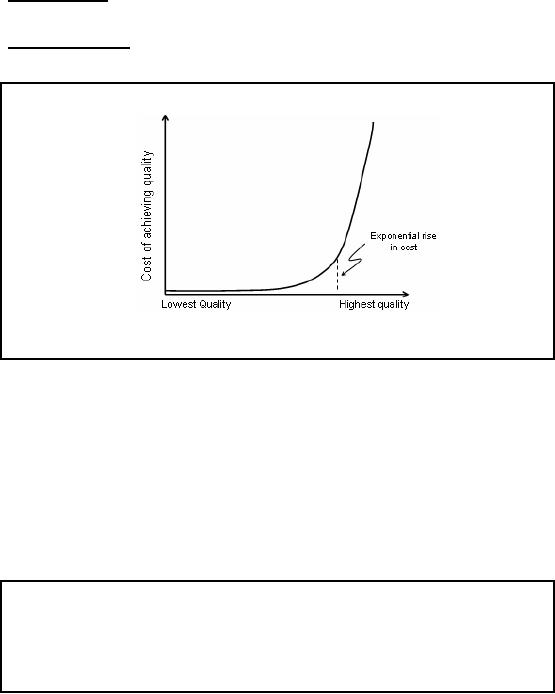

Co$t of

fixing data

quality

n Defect minimization is

economical.

n

Defect elimination is

very very expensive

Figure-21.2:

Co$t of fixing data

quality

One

can ask for the

stars i.e. perfect data quality,

only if there is no associated

cost, in such a case

this

may be a perfectly legitimate requirement.

However, in real world, such a

requirement is

NOT

realistic. Why? If you give me an

infinite amount of money, I

will personally look at

each

and

every record and data

element. But this is not

realistic; you don't have

enough money to pay

for

that. Even if you had

the money, it will take an

inordinate amount of time to check each

and

every

record. By the time I am finished

checking/fixing data, trends may have

changed, and the

question

that needed the data

may no longer be interesting

any more.

Note

that as the data quality is

pushed beyond a certain

limit, law of diminishing

returns sets in

i.e.

increasing the input does

not results in corresponding increase in

output, actually the

output

starts to go

down in this the

cost-quality ratio, as shown in Figure

21.2.

Co$t of

Data Quality

Defects

�

Controllable

Costs

o Recurring costs

for analyzing, correcting,

and preventing data

errors

�

Resultant

Costs

o Internal

and external failure costs

of business opportunities

missed.

�

Equipment &

Training Costs

The

costs are immense.

Inaccurate data will lead to

bad decisions, and bad

decisions can never

be

profitable.

Unless the fingers of the

CEO are on the pulse of the

business, that business can

not

170

prosper.

The pulse of the business is

access to reliable data,

without which a business can

not be

managed.

Direct

costs of data quality to a data intensive

organization include the

following:

1.

Controllable costs: If possible,

compare two or more

alternatives for improving

data quality.

Estimate

the controllable, equipment, and

training costs for each

alternative. Include an estimate

of labor

hours devoted to prevent, appraise, and

correct problems.

2.

Resultant costs: Missing

business opportunities. Mis

-communicating within the business

or

with

end customers and other

information stakeholders. Sometimes

resultant costs and

indirect

costs

are more difficult to quantify.

Assess these costs wherever

possible to adequately

measure

the

impacts of poor data quality.

For example, the inability

to match payroll records to

the official

employment

records can cost millions in

payroll overpayments to retirees,

personnel on leave

without

pay status, and "ghost"

employees. Inability to correlate

purchase orders to invoices

may

be a major

problem in unmatched disbursements.

Resultant costs, such as

payroll overpayments

and

unmatched disbursements, may be

significant enough to warrant extensive

changes in

processes,

systems, policy and

procedure, and information

system data designs.

3.

Equipment and training costs:

Costs

for data quality tools,

ancillary hardware and software,

and

training required to prevent, appraise,

and correct data quality

problems.

Where

data quality is

critical?

�

Almost

everywhere, some

examples:

�

Marketing

communications.

�

Customer

matching.

�

Retail

house-holding.

�

Combining

MIS systems after

acquisition.

One of

the reasons for the

lack of planning to address

data quality issues is that

operational

system

people don't sufficiently consider

the business impact of bad

data. Basically their goal is

to increase

the number of (say) claims

processed per hour. T o put it more

positively, they must

better

appreciate the opportunities of DWH

applications that are

critically dependent on

data

quality.

Following is a sampler of some

CRM -based

applications.

Marketing

Communications : If you want to

understand who your

customers are, and if

you

want to

communicate effectively with

them on the phone, mail

and via email, you

must have an

extraordinarily

clean customer list. You

destroy your credibility by

using absurd or

misspelled

addresses or

names or by sending multipl e

letters to the same person.

Even worse, if the

address

is invalid in

some way, the letter

never reaches the intending

recipient.

Customer

Matching : You want to

find the same customer when

the customer buys a second

or a

third

product from you. Customer

matching is a major issue in banking

and healthcare, where

separate

customer (or patient)

encounters are often listed

separately. The average bank

has great

difficulty

listing all of the separate

accounts of a given individual, although

the reverse proces s

of

listing

all the individuals in a

specific account is pretty

straightforward.

171

Retail

House-holding: You want to

find a group of people who

comprise a family, each

of

whom is

your customer. When you correctly

identify the household, you

can communicate

coherently

with its members. At that

time you can identify the

overall needs of the

household and

suggest an

effective consolidation or expansion of

products. This process is

called "cross-selling."

Cross-selling

your own customer base is

recognized as one of the

most effective ways to

increase

sales.

Combining

Information Systems after an Acquisition:

An

increasingly common MIS

problem

is merging

customer lists and product

lists from incompatible information

systems after an

acquisition.

Organizationally, it may be easy to imagine combining

staffs and moving

everything

to the

corporate system after an acquisition,

but what do you do with the

data itself? Sometimes

the

customer list is the most

valuable asset of the

acquired organization.

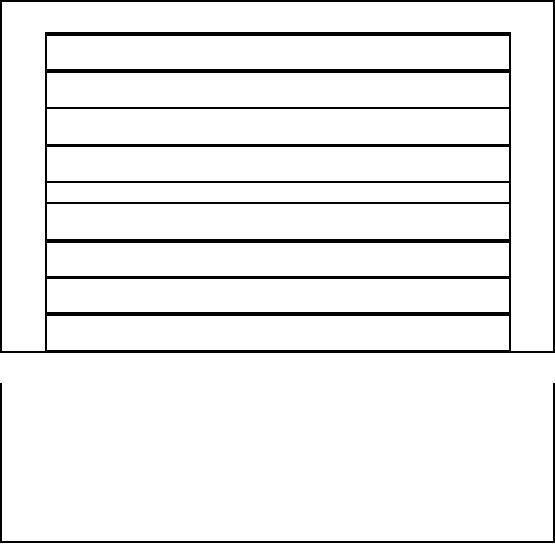

Characteristics

or Dimensions of Data

Quality

Data

Quality

Definition

Characteristic

Accuracy

Qualitatively

assessing lack of error,

high accuracy

corresponding to

small error.

Completeness

The

degree to which values are

present in the attributes

that

require

them.

Consistency

A measure of

the degree to which a set of

data satisfies a set

of

constraints.

Timeliness

A measure of

how current or up to date the

data is.

Uniqueness

The

state of being only one of

its kind or being without

an

equal or

parallel.

Interpretability

The

extent to which data is in

appropriate languages,

symbols,

and units, and the

definitions are

clear.

Accessibility

The

extent to which data is

available, or easily and

quickly

retrievable

Objectivity

The

extent to which data is

unbiased, unprejudiced, and

impartial

95%

accurate and 100%

complete

OR

100%

accurate and 95%

complete

Which is

better?

Depends on

data quality (i)

tolerances,

the

(ii) corresponding application and

the (iii) cost of achieving

that data quality vs. the

(iv)

business

value.

172

Table of Contents:

- Need of Data Warehousing

- Why a DWH, Warehousing

- The Basic Concept of Data Warehousing

- Classical SDLC and DWH SDLC, CLDS, Online Transaction Processing

- Types of Data Warehouses: Financial, Telecommunication, Insurance, Human Resource

- Normalization: Anomalies, 1NF, 2NF, INSERT, UPDATE, DELETE

- De-Normalization: Balance between Normalization and De-Normalization

- DeNormalization Techniques: Splitting Tables, Horizontal splitting, Vertical Splitting, Pre-Joining Tables, Adding Redundant Columns, Derived Attributes

- Issues of De-Normalization: Storage, Performance, Maintenance, Ease-of-use

- Online Analytical Processing OLAP: DWH and OLAP, OLTP

- OLAP Implementations: MOLAP, ROLAP, HOLAP, DOLAP

- ROLAP: Relational Database, ROLAP cube, Issues

- Dimensional Modeling DM: ER modeling, The Paradox, ER vs. DM,

- Process of Dimensional Modeling: Four Step: Choose Business Process, Grain, Facts, Dimensions

- Issues of Dimensional Modeling: Additive vs Non-Additive facts, Classification of Aggregation Functions

- Extract Transform Load ETL: ETL Cycle, Processing, Data Extraction, Data Transformation

- Issues of ETL: Diversity in source systems and platforms

- Issues of ETL: legacy data, Web scrapping, data quality, ETL vs ELT

- ETL Detail: Data Cleansing: data scrubbing, Dirty Data, Lexical Errors, Irregularities, Integrity Constraint Violation, Duplication

- Data Duplication Elimination and BSN Method: Record linkage, Merge, purge, Entity reconciliation, List washing and data cleansing

- Introduction to Data Quality Management: Intrinsic, Realistic, Orr’s Laws of Data Quality, TQM

- DQM: Quantifying Data Quality: Free-of-error, Completeness, Consistency, Ratios

- Total DQM: TDQM in a DWH, Data Quality Management Process

- Need for Speed: Parallelism: Scalability, Terminology, Parallelization OLTP Vs DSS

- Need for Speed: Hardware Techniques: Data Parallelism Concept

- Conventional Indexing Techniques: Concept, Goals, Dense Index, Sparse Index

- Special Indexing Techniques: Inverted, Bit map, Cluster, Join indexes

- Join Techniques: Nested loop, Sort Merge, Hash based join

- Data mining (DM): Knowledge Discovery in Databases KDD

- Data Mining: CLASSIFICATION, ESTIMATION, PREDICTION, CLUSTERING,

- Data Structures, types of Data Mining, Min-Max Distance, One-way, K-Means Clustering

- DWH Lifecycle: Data-Driven, Goal-Driven, User-Driven Methodologies

- DWH Implementation: Goal Driven Approach

- DWH Implementation: Goal Driven Approach

- DWH Life Cycle: Pitfalls, Mistakes, Tips

- Course Project

- Contents of Project Reports

- Case Study: Agri-Data Warehouse

- Web Warehousing: Drawbacks of traditional web sear ches, web search, Web traffic record: Log files

- Web Warehousing: Issues, Time-contiguous Log Entries, Transient Cookies, SSL, session ID Ping-pong, Persistent Cookies

- Data Transfer Service (DTS)

- Lab Data Set: Multi -Campus University

- Extracting Data Using Wizard

- Data Profiling