Chapter 9

Software Quality: The Key to Successful Software Engineering

Introduction

The overall software quality averages for the United States have scarcely changed since 1979. Although national data is flat for quality, a few companies have made major improvements. These happen to be companies that measure quality because they define quality in such a way that both prediction and measurement are possible.

The same companies also use full sets of defect removal activities that include inspections and static analysis as well as testing. Defect prevention methods such as joint application design (JAD) and development methods that focus on quality such as Team Software Process (TSP) are also used, once the importance of quality to successful software engineering is realized.

Historically, large software projects spend more time and effort on finding and fixing bugs than on any other activity. Because software defect removal efficiency only averages about 85 percent, the major costs of software maintenance are finding and fixing bugs accidentally released to customers.

When development defect removal is added to maintenance defect removal, the major cost driver for total cost of ownership (TCO) is that of defect removal. Between 30 percent and 50 percent of every dollar ever spent on software has gone to finding and fixing bugs.

When software projects run late and exceed their budgets, a main reason is excessive defect levels, which slow down testing and force applications into delays and costly overruns.

When software projects are cancelled and end up in court for breach of contract, excessive defect levels, inadequate defect removal, and poor quality measures are associated with every case.

Given the fact that software defect removal costs have been the primary cost driver for all major software projects for the past 50 years, it is surprising that so little is known about software quality.

There are dozens of books about software quality and testing, but very few of these books actually contain solid and reliable quantified data about basic topics such as:

1. How many bugs are going to be present in specific new software applications?
2. How many bugs are likely to be present in legacy software applications?
3. How can software quality be predicted and measured?
4. How effective are ISO standards in improving quality?
5. How effective are software quality assurance organizations in improving quality?
6. How effective is software quality assurance certification for improving quality?
7. How effective is Six Sigma for improving quality?
8. How effective is quality function deployment (QFD) for improving quality?
9. How effective are the higher levels of the CMMI in improving quality?
10. How effective are the forms of Agile development in improving quality?
11. How effective is the Rational Unified Process (RUP) in improving quality?
12. How effective is the Team Software Process (TSP) in improving quality?
13. How effective are the ITIL methods in improving quality?
14. How effective is service-oriented architecture (SOA) for improving quality?
15. How effective are certified reusable components for improving quality?
16. How many bugs can be eliminated by inspections?
17. How many bugs can be eliminated by static analysis?
18. How many bugs can be eliminated by testing?

19. How many different kinds of testing are needed?
20. How many test personnel are needed?
21. How effective are test specialists compared with developers?
22. How effective is automated testing?
23. How many test cases are needed for applications of various sizes?
24. How effective is test certification in improving performance?
25. How many bug repairs will themselves include new bugs?
26. How many bugs will get delivered to users?
27. How much does it cost to improve software quality?
28. How long does it take to improve software quality?
29. How much will we save from improving software quality?
30. How much is the return on investment (ROI) for better software quality?

The purpose of this chapter is to show the quantified results of every major form of quality assurance activity, inspection stage, static analysis, and testing stage on the delivered defect levels of software applications.

Defect removal comes in "private" and "public" forms. The private forms of defect removal include desk checking, static analysis, and unit testing. They are also covered in Chapter 8, because they concentrate on code defects, and that chapter deals with programming and code development.

The public forms of defect removal include formal inspections, static analysis if run by someone other than the software engineer who wrote the code, and many kinds of testing carried out by test specialists rather than the developers.

Both private and public forms of defect removal are important, but it is harder to get data on the private forms because they usually occur with no one else being present other than the person who is doing the desk checking or unit testing. As pointed out in Chapter 8, IBM used volunteers to record defects found via private removal activities.

Some development methods such as Watts Humphrey's Team Software Process (TSP) and Personal Software Process (PSP) also record private defect removal.

This chapter will also explain how to predict the number of bugs or defects that might occur, and how to predict defect removal efficiency levels. Not only code bugs, but also bugs or defects in requirements, design, and documents need to be predicted. In addition, new bugs accidentally included in bug repairs need to be predicted. These are called "bad fixes." Finally, there are also bugs or errors in test cases themselves, and these need to be predicted, too.

This chapter will discuss the best ways of measuring quality and will caution against hazardous metrics such as "cost per defect" and "lines of code," which distort results and conceal the real facts of software quality. In this chapter, several critical software quality topics will be discussed:

■ Defining Software Quality
■ Predicting Software Quality
■ Measuring Software Quality
■ Software Defect Prevention
■ Software Defect Removal
■ Specialists in Software Quality
■ The Economic Value of Software Quality

Software quality is the key to successful software engineering. Software has long been troubled by excessive numbers of software defects both during development and after release. Technologies are available that can reduce software defects and improve quality by significant amounts.

Carefully planning and selecting an effective combination of defect prevention and defect removal activities can shorten software development schedules, lower software development costs, significantly reduce maintenance and customer support costs, and improve both customer satisfaction and employee morale at the same time. Improving software quality has the highest return on investment of any current form of software process improvement.

As the recession continues, every company is anxious to lower both software development and software maintenance costs. Improving software quality will assist in improving software economics more than any other available technology.

Defining Software Quality

A good definition for software quality is fairly difficult to achieve. There are many different definitions published in the software literature. Unfortunately, some of the published definitions for quality are either abstract or off the mark. A workable definition of software quality needs to have six fundamental features:

1. Quality should be predictable before a software application starts.
2. Quality needs to encompass all deliverables and not just the code.
3. Quality should be measurable during development.

4. Quality should be measurable after release to customers.
5. Quality should be apparent to customers and recognized by them.
6. Quality should continue after release, during maintenance.

Here are some of the published definitions for quality, and explanations of why some of them don't seem to conform to the six criteria just listed.

Quality Definition 1: "Quality means conformance to requirements."

There are several problems with this definition, but the major problem is that requirements errors or bugs are numerous and severe. Errors in requirements constitute about 20 percent of total software defects and are responsible for more than 35 percent of high-severity defects.

Defining quality as conformance to a major source of error is circular reasoning, and therefore this must be considered to be a flawed and unworkable definition. Obviously, a workable definition for quality has to include errors in requirements themselves.

Don't forget that the famous Y2K problem originated as a specific user requirement and not as a coding bug. Many software engineers warned clients and managers that limiting date fields to two digits would cause problems, but their warnings were ignored or rejected outright.

The author once worked (briefly) as an expert witness in a lawsuit where a company attempted to sue an outsource vendor for using two-digit date fields in a software application developed under contract. During the discovery phase, it was revealed that the vendor cautioned the client that two-digit date fields were hazardous, but the client rejected the advice and insisted that the Y2K problem be included in the application. In fact, the client's own internal standards mandated two-digit date fields. Needless to say, the client dropped the suit when it became evident that they themselves were the cause of the problem. The case illustrates that "user requirements" are often wrong and sometimes even dangerous or "toxic."

It also illustrates another point. Neither the corporate executives nor the legal department of the plaintiff knew that the Y2K problem had been caused by their own policies and practices. Obviously, there is a need for better governance of software from the top when problems such as this are not understood by corporate executives.

Using modern terminology from the recession, it is necessary to remove "toxic requirements" before conformance can be safe. The definition of quality as "conformance to requirements" does not lead to any significant quality improvements over time. No more requirements are being met in 2009 than in 1979.

If software engineering is to become a true profession rather than an art form, software engineers have a responsibility to help customers define requirements in a thorough and effective manner. It is the job of a professional software engineer to insist on effective requirements methods such as joint application design (JAD), quality function deployment (QFD), and requirements inspections.

Far too often the literature on software quality is passive and makes the incorrect assumption that users will be 100 percent effective in identifying requirements. This is a dangerous assumption. User requirements are never complete and they are often wrong. For a software project to succeed, requirements need to be gathered and analyzed in a professional manner, and software engineering is the profession that should know how to do this well.

It should be the responsibility of the software engineers to insist that proper requirements methods be used. These include joint application design (JAD), quality function deployment (QFD), and requirements inspections. Other methods that benefit requirements, such as embedded users or use-cases, might also be recommended. The users themselves are not software engineers and cannot be expected to know optimal ways of expressing and analyzing requirements. Ensuring that requirements collection and analysis are at state-of-the-art levels devolves to the software engineering team.

Once user requirements have been collected and analyzed, then conformance to them should of course occur. However, before conformance can be safe and effective, dangerous or toxic requirements have to be weeded out, excess and superfluous requirements should be pointed out to the users, and potential gaps that will cause creeping requirements should be identified and also quantified. The users themselves will need professional assistance from the software engineering team, who should not be passive bystanders for requirements gathering and analysis.

Unfortunately, requirements bugs cannot be removed by ordinary testing. If requirements bugs are not prevented from occurring, or not removed via formal inspections, test cases that are constructed from the requirements will confirm the errors and not find them. (This is why years of software testing never found and removed the Y2K problem.)

A second problem with this definition is that it is not predictable during development. Conformance to requirements can be measured after the fact, but that is too late for cost-effective recovery.

A third problem with this definition is that for brand-new kinds of innovative applications, there may not be any users other than the original inventor. Consider the history of successful software innovation such as the APL programming language, the first spreadsheet, and the early web search engine that later became Google.

These innovative applications were all created by inventors to solve problems that they themselves wanted to solve. They were not created based on the normal concept of "user requirements." Until prototypes were developed, other people seldom even realized how valuable the inventions would be. Therefore, "user requirements" are not completely relevant to brand-new inventions until after they have been revealed to the public.

Given the fact that software requirements grow and change at measured rates of 1 percent to more than 2 percent every calendar month during the subsequent design and coding phases, it is apparent that achieving a full understanding of requirements is a difficult task.
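To give a feel for what monthly growth of 1 to 2 percent means over a project, here is a minimal sketch. The function name, the 1,000-function-point starting size, and the 12-month design and coding period are illustrative assumptions and not figures from this chapter. The text does not say whether the monthly rate compounds, so both readings are shown.

```python
def grown_size(initial_size: float, monthly_rate: float, months: int,
               compound: bool = True) -> float:
    """Return application size after `months` of requirements growth.

    `monthly_rate` is a fraction (0.01 means 1 percent per calendar month).
    Whether growth compounds is an assumption; the simple (non-compounding)
    reading is available with compound=False.
    """
    if compound:
        return initial_size * (1.0 + monthly_rate) ** months
    return initial_size * (1.0 + monthly_rate * months)


if __name__ == "__main__":
    initial = 1000.0  # hypothetical size in function points
    months = 12       # hypothetical design and coding duration
    for rate in (0.01, 0.02):
        simple = grown_size(initial, rate, months, compound=False)
        compounded = grown_size(initial, rate, months, compound=True)
        print(f"{rate:.0%} per month: simple {simple:.0f}, "
              f"compounded {compounded:.0f} function points")
```

Under either reading, a year of design and coding adds well over a tenth to the original requirements, which is why gaps need to be identified and quantified early.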

Software requirements are important, but the combination of toxic requirements, missing requirements, and excess requirements makes simplistic definitions such as "quality means conformance to requirements" hazardous to the software industry.

Quality Definition 2: "Quality means reliability, portability, and many other -ilities."

The problem with defining quality as a set of words ending with -ility is that many of these factors are neither predictable before they occur nor easily measurable when they do occur.

While most of the -ility words are useful properties for software applications, some don't seem to have much to do with quality as we would consider the term for a physical device such as an automobile or a toaster. For example, "portability" may be useful for a software vendor, but it does not seem to have much relevance to quality in the eyes of a majority of users.

The use of -ility words to define quality does not lead to quality improvements over time. In 2009, the software industry is no better in terms of many of these -ilities than it was in 1979. Using modern language from the recession, many of the -ilities are "subprime" definitions that don't prevent serious quality failures. In fact, using -ilities rather than focusing on defect prevention and removal slows down progress on software quality control.

Among the many words that are cited when using this definition can be found (in alphabetical order):

1. Augmentability
2. Compatibility
3. Expandability
4. Flexibility
5. Interoperability

6. Maintainability
7. Manageability
8. Modifiability
9. Operability
10. Portability
11. Reliability
12. Scalability
13. Survivability
14. Understandability
15. Usability
16. Testability
17. Traceability
18. Verifiability

Of the words on this list, only a few such as "reliability" and "testability" seem to be relevant to quality as viewed by users. The other terms range from being obscure (such as "survivability") to useful but irrelevant (such as "portability"). Other terms may be of interest to the vendor or development team, but not to customers (such as "maintainability").

The -ility words seem to have an academic origin because they don't really address some of the real-world quality issues that bother customers. For example, none of these terms addresses ease or difficulty of reaching customer support to get help when a bug is noted or the software misbehaves. None of the terms deals with the speed of fixing bugs and providing the fix to users in a timely manner.

The new Information Technology Infrastructure Library (ITIL) does a much better job of dealing with issues of quality in the eyes of users, such as customer support, incident management, and defect repair intervals, than does the standard literature dealing with software quality.

More seriously, the list of -ility words ignores two of the main topics that have a major impact on software quality when the software is finally released to customers: (1) defect potentials and (2) defect removal efficiency levels.

The term defect potential refers to the total quantity of defects that will likely occur when designing and building a software application. Defect potentials include bugs or defects in requirements, design, code, user documents, and bad fixes or secondary defects. The term defect removal efficiency refers to the percentage of defects found by any sequence of inspection, static analysis, and test stages.
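One common way to reason about the efficiency of a sequence of removal stages is sketched below. It assumes that each stage removes a fixed fraction of the defects still present when it runs, that the stages are independent, and that no bad fixes are injected along the way. These are simplifying assumptions, and the per-stage percentages in the example are hypothetical values chosen only for illustration, not data from this chapter.

```python
from typing import Iterable


def cumulative_removal_efficiency(stage_efficiencies: Iterable[float]) -> float:
    """Combine per-stage removal efficiencies into one cumulative figure.

    Each value is the fraction (0.0 to 1.0) of the defects still present
    that a stage removes. Assumes independent stages and no bad fixes.
    """
    remaining = 1.0  # fraction of the original defects still in the product
    for efficiency in stage_efficiencies:
        if not 0.0 <= efficiency <= 1.0:
            raise ValueError("stage efficiency must be between 0 and 1")
        remaining *= 1.0 - efficiency
    return 1.0 - remaining


if __name__ == "__main__":
    # Hypothetical stages: inspection, static analysis, testing.
    stages = [0.60, 0.50, 0.30]
    print(f"cumulative efficiency: {cumulative_removal_efficiency(stages):.0%}")
```

Under these assumptions no single stage needs to be highly efficient on its own, but a stage that is skipped leaves every later stage to work on a larger pool of defects.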

To reach acceptable levels of quality in the view of customers, a combination of low defect potentials and high defect removal efficiency rates (greater than 95 percent) is needed. The current U.S. average for software quality is a defect potential of about 5.0 bugs per function point coupled with 85 percent defect removal efficiency. This combination yields a total of delivered defects of about 0.75 per function point, which the author regards as unprofessional and unacceptable.

Defect potentials need to drop below 2.5 per function point and defect removal efficiency needs to average greater than 95 percent for software engineering to be taken seriously as a true engineering discipline. This combination would result in a delivered defect total of only 0.125 defect per function point or about one-sixth of today's averages. Achieving or exceeding this level of quality is possible today in 2009, but seldom achieved.
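The arithmetic behind these figures is simply the defect potential multiplied by the fraction of defects that escape removal. The short sketch below reproduces the numbers quoted above; the function name is an illustrative choice and not terminology from the chapter.

```python
def delivered_defects_per_fp(defect_potential: float,
                             removal_efficiency: float) -> float:
    """Delivered defects per function point.

    defect_potential:   total defects per function point expected to occur
    removal_efficiency: fraction of those defects removed before release
    """
    return defect_potential * (1.0 - removal_efficiency)


# Figures quoted in the text: current U.S. average versus the proposed target.
current = delivered_defects_per_fp(5.0, 0.85)
target = delivered_defects_per_fp(2.5, 0.95)

if __name__ == "__main__":
    print(f"current average: {current:.3f} delivered defects per function point")
    print(f"target:          {target:.3f} delivered defects per function point")
    print(f"improvement:     {current / target:.1f}x fewer delivered defects")
```

Halving the defect potential and cutting the escape rate from 15 percent to 5 percent multiply together, which is where the factor of six comes from.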

One of the reasons that good quality is not achieved as widely as it might be is that concentrating on the -ility topics rather than measuring defects and defect removal efficiency leads to gaps and failures in defect removal activities. In other words, the -ilities definitions of quality are a distraction from serious study of software defect causes and the best methods of preventing and removing software defects.

Specific levels of defect potentials and defect removal efficiency levels could be included in outsource agreements. These would probably be more effective than current contracting practices for quality, which are often nonexistent or merely insist on a certain CMMI level.

If software is released with excessive quantities of defects so that it stops, behaves erratically, or runs slowly, it will soon be discovered that most of the -ility words fall by the wayside.

Defect quantities in released software tend to be the paramount quality issue with users of software applications, coupled with what kinds of corrective actions the software vendor will take once defects are reported. This brings up a third and more relevant definition of software quality.

Quality Definition 3: "Quality is the absence of defects that would cause an application to stop working or to produce incorrect results."

A software defect is a bug or error that causes software to either stop operating or to produce invalid or unacceptable results. Using IBM's severity scale, defects have four levels of severity:

■ Severity 1 means that the software application does not work at all.
■ Severity 2 means that major functions are disabled or produce incorrect results.
■ Severity 3 means that there are minor issues or minor functions are not working.
■ Severity 4 means a cosmetic problem that does not affect operation.

There is some subjectivity with these defect severity levels because they are assigned by human beings. Under the IBM model, the initial severity level is assigned when the bug is first reported, based on symptoms described by the customer or user who reported the defect. However, a final severity level is assigned by the change team when the defect is repaired.
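A defect-tracking record that follows this two-step assignment might look like the sketch below. The class names, field names, and level labels are hypothetical and are not taken from any IBM tool; only the four-level scale and the initial and final assignment come from the description above.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Optional


class Severity(IntEnum):
    """Four-level severity scale as described in the text (labels are ours)."""
    APPLICATION_DOWN = 1   # application does not work at all
    MAJOR_FUNCTION = 2     # major functions disabled or incorrect results
    MINOR_FUNCTION = 3     # minor issues or minor functions not working
    COSMETIC = 4           # cosmetic problem, operation unaffected


@dataclass
class DefectReport:
    summary: str
    initial_severity: Severity                  # from the reporter's symptoms
    final_severity: Optional[Severity] = None   # set by the change team at repair

    @property
    def effective_severity(self) -> Severity:
        """Prefer the change team's final level once it exists."""
        if self.final_severity is not None:
            return self.final_severity
        return self.initial_severity


if __name__ == "__main__":
    report = DefectReport("Totals wrong on monthly statement",
                          Severity.MAJOR_FUNCTION)
    print(report.effective_severity.name)   # initial level, as reported
    report.final_severity = Severity.MINOR_FUNCTION
    print(report.effective_severity.name)   # level after repair
```

Keeping both values, rather than overwriting the first, makes it possible to study how often the reporter's assessment and the change team's assessment differ.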

This definition of quality is one favored by the author for several reasons. First, defects can be predicted before they occur and measured when they do occur. Second, customer satisfaction surveys for many software applications appear to correlate more closely to delivered defect levels than to any other factor. Third, many of the -ility factors also correlate to defects, or to the absence of defects. For example, reliability correlates exactly to the number of defects found in software. Usability, testability, traceability, and verifiability also have indirect correlations to software defect levels.

Measuring defect volumes and defect severity levels and then taking effective steps to reduce those volumes via a combination of defect prevention and defect removal activities is the key to successful software engineering.

This definition of software quality does lead to quality improvements over time. The companies that measure defect potentials, defect removal efficiency levels, and delivered defects have improved both factors by significant amounts. This definition of quality supports process improvements, predicting quality, measuring quality, and customer satisfaction as measured by surveys.

Therefore, companies that measure quality such as IBM, Dovél Technologies, and AT&T have made progress in quality control. Also, methods that integrate defect tracking and reporting such as Team Software Process (TSP) have made significant progress in reducing delivered defects. This is also true for some open-source applications that have added static analysis to their suite of defect removal tools.

Defect and removal efficiency measures have been used to validate the effectiveness of formal inspections, show the impact of static analysis, and fine-tune more than 15 kinds of testing. The subjective measures have no ability to deal with such issues.

Every software engineer and every software project manager should be trained in methods for predicting software defects, measuring software defects, preventing software defects, and removing software defects. Without knowledge of effective quality and defect control, software engineering is a hoax.

The full definition of quality suggested by the author includes these nine factors:

1. Quality implies low levels of defects when software is deployed, ideally approaching zero defects.

2. Quality implies high reliability, or being able to run without stoppage or strange and unexpected results or sluggish performance.
3. Quality implies high levels of user satisfaction when users are surveyed about software applications and their features.
4. Quality implies a feature set that meets the normal operational needs of a majority of customers or users.
5. Quality implies a code structure and comment density that minimize bad fixes or accidentally inserting new bugs when attempting to repair old bugs. This same structure will facilitate adding new features.
6. Quality implies effective customer support when problems do occur, with minimal difficulty for customers in contacting the support team and getting assistance.
7. Quality implies rapid repairs of known defects, and especially so for high-severity defects.
8. Quality should be supported by meaningful guarantees and warranties offered by software developers to software users.
9. Effective definitions of quality should lead to quality improvements. This means that quality needs to be defined rigorously enough so that both improvements and degradations can be identified, and also averages. If a definition for quality cannot show changes or improvements, then it is of very limited value.

The 6th, 7th, 8th, and 9th of these quality issues tend to be sparsely covered by the literature on software quality, other than the new ITIL books. Unfortunately, the ITIL coverage is used only for internal software applications and is essentially ignored by commercial software vendors.

The definition of quality as an absence of defects, combined with supplemental topics such as ease of customer support and maintenance speed, captures the essence of quality in the view of many software users and customers.

Consider how the three definitions of quality discussed in this chapter might relate to a well-known software product such as Microsoft Vista. Vista has been selected as an example because it is one of the best-known large software applications in the world, and therefore a good test bed for trying out various quality definitions.

Applying Definition 1 to Vista: "Quality means conformance to requirements."

The first definition would be hard to use for Vista, since no ordinary customers were asked what features they wanted in the operating system, although focus groups were probably used at some point.

If you compare Vista with XP, Leopard, or Linux, it seems to include a superabundance of features and functions, many of which were neither requested nor ever used by a majority of users. One topic that the software engineering literature does not cover well, or at all, is that of overstuffing applications with unnecessary and useless features.

Most people know that ordinary requirements usually omit about 20 percent of functions that users want. However, not many people know that for commercial software put out by companies such as Microsoft, Symantec, Computer Associates, and the like, more than 40 percent of an application's features may be ones that customers don't want and never use.

Feature stuffing is essentially a competitive move to either imitate what competitors do, or to attempt to pull ahead of smaller competitors by providing hundreds of costly but marginal features that small competitors could not imitate. In either case, feature stuffing is not a satisfactory conformance to user requirements.

Further, certain basic features such as security and performance, which users of operating systems do appreciate, are not particularly well embodied in Vista.

The bottom line is that defining quality as conformance to requirements is almost useless for applications with greater than 1 million users such as Vista, because it is impossible to know what such a large group will want or not want.

Also, users seldom are able to articulate requirements in an effective manner, so it is the job of professional software engineers to help users in defining requirements with care and accuracy. Too often the software literature assumes that software engineers are only passive observers of user requirements, when in fact, software engineers should be playing the role of physicians who are diagnosing medical conditions in order to prescribe effective therapies.

Physicians don't just passively ask patients what the problem is and what kind of medicine they want to take. Our job as software engineers is to have professional knowledge about effective requirement gathering and analysis methods (i.e., like medical diagnostic tests) and to also know what kinds of applications might provide effective "therapies" for user needs.

Passively waiting for users to define requirements without assisting them in using joint application design (JAD) or quality function deployment (QFD) or data mining of legacy applications is unprofessional on the part of the software engineering community. Users are not trained in requirements definition, so we need to step up to the task of assisting them.

Applying Definition 2 to Vista: "Quality means adherence to -ility terms."

When Vista is judged by matching its features against the list of -ility terms shown earlier, it can be seen how abstract and difficult to apply such a list really is:

1. Augmentability       Ambiguous and difficult to apply to Vista
2. Compatibility        Poor for Vista; many old applications don't work
3. Expandability        Applicable to Vista and fairly good
4. Flexibility          Ambiguous and difficult to apply to Vista
5. Interoperability     Ambiguous and difficult to apply to Vista
6. Maintainability      Unknown to users but probably poor for Vista
7. Manageability        Ambiguous and difficult to apply to Vista
8. Modifiability        Unknown to users but probably poor for Vista
9. Operability          Ambiguous and difficult to apply to Vista
10. Portability         Poor for Vista
11. Reliability         Originally poor for Vista but improving
12. Scalability         Marginal for Vista
13. Survivability       Ambiguous and difficult to apply to Vista
14. Understandability   Poor for Vista
15. Usability           Asserted to be good for Vista, but questionable
16. Testability         Poor for Vista: complexity far too high
17. Traceability        Poor for Vista: complexity far too high
18. Verifiability       Ambiguous and difficult to apply to Vista

The bottom line is that more than half of the -ility words are difficult or ambiguous to apply to Vista or any other commercial software application. Of the ones that can be applied to Vista, the application does not seem to have satisfied any of them but expandability and usability.

Many of the -ility words can be neither predicted nor measured. Worse, even if they could be predicted and measured, they are of marginal interest in terms of serious quality control.

Applying Definition 3 to Vista: "Quality means an absence of defects, plus corollary factors."

Released defects can and should be counted for every software application. Other related topics such as ease of reporting defects and speed of repairing defects should also be measured.

Unfortunately, for commercial software, not all of these nine topics can be evaluated. Microsoft, together with many other software vendors, does not publish data on bad-fix injections or even on total numbers of bugs reported. However, six of the nine factors can be evaluated by means of journal articles and limited Microsoft data.

1. Vista was released with hundreds or thousands of defects, although Microsoft will not provide the exact number of defects found and reported by users.
2. At first Vista was not very reliable, but achieved acceptable reliability after about a year of usage. Microsoft does not report data on mean time to failure or other measures of reliability.
3. Vista never achieved high levels of user satisfaction compared with XP. The major sources of dissatisfaction include lack of printer drivers, poor compatibility with older applications, excessive resource usage, and sluggish performance on anything short of high-end computer chips and lots of memory.
4. The feature set of Vista has been noted as adequate in customer surveys, other than excessive security vulnerabilities.
5. Microsoft does not release statistics on bad-fix injections or on numbers of defect reports, so this factor cannot be known by the general public.
6. Microsoft customer support is marginal and troublesome to access and use. This is a common failing of many software vendors.
7. Some known bugs have remained in Microsoft Vista for several years. Microsoft is marginally adequate in defect repair speed.
8. There is no effective warranty for Vista (or for other commercial applications). Microsoft's end-user license agreement (EULA) absolves Microsoft of any liabilities other than replacing a defective disk.
9. Microsoft's new operating system is not yet available as this book is published, so it is not possible to know if Microsoft has used methods that will yield better quality than Vista. However, since Microsoft does have substantial internal defect tracking and quality assurance methods, hopefully quality will be better. Microsoft has shown some improvements in quality over time.

Based on this pattern of analysis for the nine factors, it cannot be said that Vista is a high-quality application under any of the definitions. Of the three major definitions, defining quality as conformance to requirements is almost impossible to use with Vista because with millions of users, nobody can define what everybody wants.

The second definition of quality as a string of -ility words is difficult to apply, and many of the words are irrelevant. These words might be marginally useful for small internal applications, but are not particularly helpful for commercial software. Also, many key quality issues such as customer support and maintenance repair times are not found in any of the -ility words.

The third definition, which centers on defects, customer support, defect repairs, and better warranties, seems to be the most relevant. The third definition also has the advantage of being both predictable and measurable, which the first two are not.

Given the high costs of commercial software, the marginal or useless warranties of commercial software, and the poor customer support offered by commercial software vendors, the author would favor mandatory defect reporting that requires commercial vendors such as Microsoft to produce data on defects reported by customers, sorted by severity levels.

Mandatory defect reporting is already a requirement for many products that affect human life or safety, such as medicines, aircraft engines, automobiles, and many other consumer products. Mandatory reporting of business and financial information is also required. Software affects human life and safety in critical ways, and it affects business operations in critical ways, but to date software has been exempt from serious study due to the lack of any mandate for measuring and reporting released defect levels.

Somewhat surprisingly, the open-source software community appears to be pulling ahead of old-line commercial software vendors in terms of measuring and reporting defects. Many open-source companies have added defect tracking and static-analysis tools to their quality arsenal, and are making data available to customers that is not available from many commercial software vendors.

The author would also favor a "lemon law" for commercial software similar to the lemon law for automobiles. If serious defects occur that users cannot get repaired when making a good-faith effort to resolve the situation with vendors, vendors should be required to return the full purchase or lease price of the offending software application.

A form of lemon law might also be applied to outsource contracts, except that litigation already provides relief for outsource failures. That relief cannot be used against commercial software vendors due to their one-sided EULAs, which disclaim any responsibility for quality other than media replacement.

No doubt software vendors would object to both mandatory defect tracking and a lemon law. But shrewd and farsighted vendors would soon perceive that both topics offer significant competitive advantages to software companies that know how to control quality. Since high-quality software is also cheaper and faster to develop and has lower maintenance costs than buggy software, there are even more important economic advantages for shrewd vendors.


The author hypothesizes that a combination of mandatory defect reporting by software vendors plus a lemon law would have the effect of improving software quality by about 50 percent every five years for perhaps a 20-year period.

Software quality needs to be taken much more seriously than it has been. Now that the recession is expanding, better software quality control is one of the most effective strategies for lowering software costs. But effective quality control depends on better measures of quality and on proven combinations of defect prevention and defect removal activities.

Quality prediction, quality measurement, better defect prevention, and better defect removal are on the critical path for advancing software engineering to the status of a true engineering discipline instead of a craft or art form as it is today in 2009.

Defining and Predicting Software Defects

If delivered defects are the main quality problem for software, it is important to know what causes these defects, so that they can be prevented from occurring or removed before delivery.

The software quality literature includes a great deal of pedantic bickering about terms such as "fault," "error," "bug," "defect," and many others. For this book, if software stops working, won't load, operates erratically, or produces incorrect results due to mistakes in its own code, then that is called a "defect." (This same definition has been used in 14 of the author's previous books and also in more than 30 journal articles. The author first used this definition in 1978.)

However, in the modern world, the same set of problems can occur without the developers or the code being the cause. Software infected by a virus or spyware can also stop working, refuse to load, operate erratically, and produce incorrect results. In today's world, some defect reports may well be caused by outside attacks.

Attacks on software from hackers are not the same as self-inflicted defects, although successful attacks do imply security vulnerabilities.

In this book and the author's previous books, software defects have five main points of origin:

1. Requirements
2. Design
3. Code
4. User documents
5. Bad fixes (new defects due to repairs of older defects)


Because the author worked for IBM when starting research on quality, the IBM severity scale for classifying defect severity levels is used in this book and the author's previous books. There are four severity levels:

■ Severity 1: Software does not operate at all
■ Severity 2: Major features disabled or incorrect
■ Severity 3: Minor features disabled or incorrect
■ Severity 4: Cosmetic error that does not affect operation

There are other methods of classifying severity levels, but these four are the most common because IBM introduced them in the 1960s and they became a de facto standard.

Software defects have seven kinds of causes, with the major causes including:

Errors of omission: Something needed was accidentally left out

Errors of commission: Something needed is incorrect

Errors of ambiguity: Something is interpreted in several ways

Errors of performance: Some routines are too slow to be useful

Errors of security: Security vulnerabilities allow attacks from outside

Errors of excess: Irrelevant code and unneeded features are included

Errors of poor removal: Defects that should easily have been found

These seven causes occur with different frequencies for different deliverables. For paper documents such as requirements and design, errors of ambiguity are most common, followed by errors of omission. For source code, errors of commission are most common, followed by errors of performance and security.

The seventh category, "errors of poor removal," would require root-cause analysis for identification. The implication is that the defect was neither subtle nor hard to find, but was missed because test cases did not cover the code segment or because of partial inspections that overlooked the defect.

In a sense, all delivered defects might be viewed as errors of poor removal, but it is important to find out why various kinds of inspection, static analysis, or testing missed obvious bugs. This category should not be assigned for subtle defects, but rather for obvious defects that should have been found but for some reason escaped to the outside world.

The main reason for including errors of poor removal is to encourage more study and research on the effectiveness of various kinds of defect removal operations. More solid data is needed on the removal efficiency levels of inspections, static analysis, automatic testing, and all forms of manual testing.

The combination of defect origins, defect severity, and defect causes provides a useful taxonomy for classifying defects for statistical analysis or root-cause analysis. For example, the Y2K problem was cited earlier in this chapter. In its most common manifestation, the Y2K problem might have this description using the taxonomy just discussed:

Y2K origin: Requirements

Y2K severity: Severity 2 (major features disabled)

Y2K primary cause: Error of commission

Y2K secondary cause: Error of poor removal

Note that this taxonomy allows the use of primary and secondary factors, since sometimes more than one problem is behind having a defect in software.

Note also that the Y2K problem did not have the same severity for every application. An approximate distribution of Y2K severity levels across several hundred applications showed that the software stopped in about 15 percent of instances, which are severity 1 problems; it created severity 2 problems in about 50 percent; it created severity 3 problems in about 25 percent; and it had no operational consequences in about 10 percent of the applications in the sample.

To know the origin of a defect, some research is required. Most defects are initially found because the code stops working or produces erratic results. But it is important to know if upstream problems such as requirements or design issues are the true cause. Root-cause analysis can find the true causes of software defects.

Several other factors should be included in a taxonomy for tracking defects. These include whether a reported defect is valid or invalid. (Invalid defects are common and fairly expensive, since they still require analysis and a response.) Another factor is whether a defect report is new and unique, or merely a duplicate of a prior defect report.

For testing and static analysis, the category of "false positives" needs to be included. A false positive is the mistaken identification of a code segment that initially seems to be incorrect, but which later research reveals is actually correct.

A third factor deals with whether the repair team can make the same problem occur on their own systems, or whether the defect was caused by a unique configuration on the client's system. IBM termed defects that could not be duplicated abeyant defects, since additional information needed to be collected to solve the problem.

Adding these additional topics to the Y2K example would result in an expanded taxonomy:

Y2K origin: Requirements

Y2K validity: Valid defect report

Y2K uniqueness: Duplicate (this problem was reported millions of times)

Y2K severity: Severity 2 (major features disabled)

Y2K primary cause: Error of commission

Y2K secondary cause: Error of poor removal
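
The expanded taxonomy lends itself to a small record type for a defect-tracking tool. The following is a minimal sketch, not a format the author prescribes: the class and field names (`Origin`, `Cause`, `DefectReport`, `valid`, `duplicate`) are hypothetical and chosen only to mirror the origins, causes, and IBM severity levels described in this chapter.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Origin(Enum):
    """The five defect origins used in this chapter."""
    REQUIREMENTS = "Requirements"
    DESIGN = "Design"
    CODE = "Code"
    USER_DOCUMENTS = "User documents"
    BAD_FIX = "Bad fix"


class Cause(Enum):
    """The seven kinds of defect causes used in this chapter."""
    OMISSION = "Error of omission"
    COMMISSION = "Error of commission"
    AMBIGUITY = "Error of ambiguity"
    PERFORMANCE = "Error of performance"
    SECURITY = "Error of security"
    EXCESS = "Error of excess"
    POOR_REMOVAL = "Error of poor removal"


@dataclass(frozen=True)
class DefectReport:
    """One classified defect report (hypothetical layout)."""
    origin: Origin
    severity: int  # IBM scale: 1 (does not operate) to 4 (cosmetic)
    primary_cause: Cause
    secondary_cause: Optional[Cause] = None
    valid: bool = True        # False for invalid defect reports
    duplicate: bool = False   # True if a prior report covers the same defect

    def __post_init__(self):
        if self.severity not in (1, 2, 3, 4):
            raise ValueError("severity must be 1, 2, 3, or 4")


# The Y2K example, classified with the expanded taxonomy from the text.
y2k = DefectReport(
    origin=Origin.REQUIREMENTS,
    severity=2,
    primary_cause=Cause.COMMISSION,
    secondary_cause=Cause.POOR_REMOVAL,
    valid=True,
    duplicate=True,
)
print(y2k)
```

Using enumerations rather than free text keeps the categories fixed, which matters when reports are later grouped for statistical or root-cause analysis.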


When defects are being counted or predicted, it is useful to have a standard metric for normalizing the results. As discussed in Chapter 5, there are at least ten candidates for such a normalizing metric, including function points, story points, use-case points, lines of code, and so on.

In this book and also in the author's previous books, the function point metric defined by the International Function Point Users Group (IFPUG) is used to quantify and normalize data for both defects and productivity.

There are several reasons for using IFPUG function points. The most important reason in terms of measuring software defects is that noncode defects in requirements, design, and documents are major defect sources and cannot be measured using the older "lines of code" metric.

Another important reason is that all of the major benchmark data collections for productivity and quality use function point metrics, and data expressed via IFPUG function points makes up about 85 percent of all known benchmarks.

It is not impossible to use other metrics for normalization, but if results are to be compared against industry benchmarks such as those published by the International Software Benchmarking Standards Group (ISBSG), IFPUG function points are the most convenient.

Later in the discussion of defect prediction, examples will be given of using other metrics in addition to IFPUG function points.

It is interesting to combine the origin, severity, and cause factors to examine the approximate frequency of each.

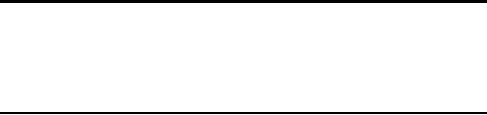

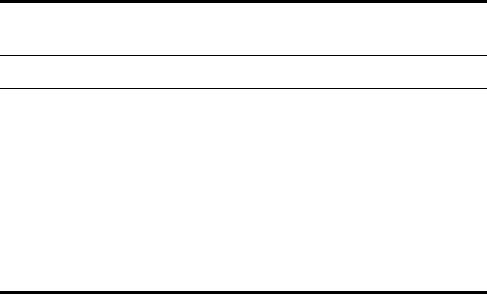

Table 9-1 shows the combination of these factors for software applications during development. In other words, Table 9-1 shows defect potentials: the probable numbers of defects that will be encountered during development and after release. Only severity 1 and severity 2 defects are shown in Table 9-1.

Data on defect potentials is based on long-range studies of defects and defect removal efficiency carried out by organizations such as the IBM Software Quality Assurance groups, which have been studying software quality for more than 35 years.

TABLE 9-1 Overview of Software Defect Potentials

| Defect Origins | Defects per Function Point | Severity 1 Defects | Severity 2 Defects | Most Frequent Defect Cause |
|---|---|---|---|---|
| Requirements | 1.00 | 11.00% | 15.00% | Omission |
| Design | 1.25 | 15.00% | 20.00% | Omission |
| Code | 1.75 | 70.00% | 57.00% | Commission |
| Documents | 0.60 | 1.00% | 1.00% | Ambiguity |
| Bad fixes | 0.40 | 3.00% | 7.00% | Commission |
| TOTAL | 5.00 | 100.00% | 100.00% | Omission |


Other corporations such as AT&T, Coverity, Computer Aid Inc. (CAI), Dovél Technologies, Motorola, Software Productivity Research (SPR), Galorath Associates, the David Consulting Group, the Quality and Productivity Management Group (QPMG), Unisys, Microsoft, and the like, also carry out long-range studies of defects and removal efficiency levels. Most such studies are carried out by corporations rather than universities because academia is not really set up to carry out longitudinal studies that may last more than ten years.

While coding bugs or coding defects are the most numerous during development, they are also the easiest to find and to get rid of. A combination of inspections, static analysis, and testing can wipe out more than 95 percent of coding defects and sometimes top 99 percent. Requirements defects and bad fixes are the toughest categories of defect to eliminate.

Table 9-2 uses Table 9-1 as a starting point, but shows the latent defects that will still be present when the software application is delivered to users. Table 9-2 shows approximate U.S. averages circa 2009. Note the variations in defect removal efficiency by origin.

It is interesting that when the software is delivered to clients, requirements defects are the most numerous, primarily because they are the most difficult to prevent and also the most difficult to find. Only formal requirements-gathering methods combined with formal requirements inspections can improve the situation for finding and removing requirements defects.

If not prevented or removed, both requirements bugs and design bugs eventually find their way into the code. These are not coding bugs per se, such as branching to a wrong address, but more serious and deep-seated kinds of bugs or defects.

It was noted earlier in this chapter that requirements defects cannot be found and removed by means of testing. If a requirements defect is not prevented or removed via inspection, all test cases created using the requirements will confirm the defect and not identify it.

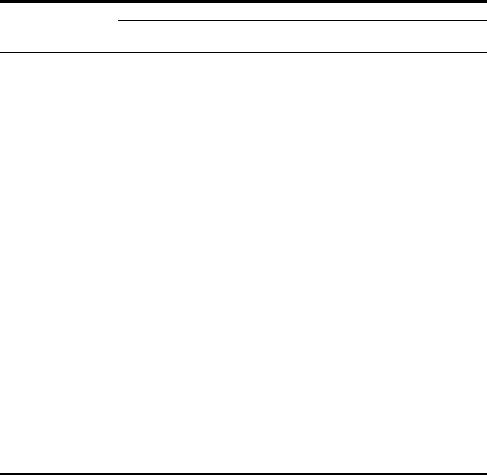

TABLE 9-2 Overview of Delivered Software Defects

| Defect Origins | Defects per Function Point | Removal Efficiency | Delivered Defects per Function Point | Most Frequent Defect Cause |
|---|---|---|---|---|
| Requirements | 1.00 | 70.00% | 0.30 | Commission |
| Design | 1.25 | 85.00% | 0.19 | Commission |
| Code | 1.75 | 95.00% | 0.09 | Commission |
| Documents | 0.60 | 91.00% | 0.05 | Omission |
| Bad fixes | 0.40 | 70.00% | 0.12 | Commission |
| TOTAL | 5.00 | 85.02% | 0.75 | Commission |
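
The delivered-defect column in Table 9-2 follows from the other two numeric columns: delivered defects are the defect potential multiplied by the fraction of defects not removed. The sketch below, with hypothetical function and variable names, recomputes that column and the cumulative removal efficiency from the per-origin figures in the table. It assumes the simple multiplicative relationship just stated, which is consistent with the table but is not spelled out as a formula in the text.

```python
# Defect potential (defects per function point) and removal efficiency
# for each origin, copied from Table 9-2.
TABLE_9_2 = {
    "Requirements": (1.00, 0.70),
    "Design": (1.25, 0.85),
    "Code": (1.75, 0.95),
    "Documents": (0.60, 0.91),
    "Bad fixes": (0.40, 0.70),
}


def delivered_defects(table):
    """Delivered defects per function point for each origin.

    Assumes delivered = potential * (1 - removal efficiency).
    """
    return {
        origin: potential * (1.0 - efficiency)
        for origin, (potential, efficiency) in table.items()
    }


def cumulative_efficiency(table):
    """Share of the total defect potential removed before delivery."""
    total_potential = sum(potential for potential, _ in table.values())
    total_delivered = sum(delivered_defects(table).values())
    return 1.0 - total_delivered / total_potential


delivered = delivered_defects(TABLE_9_2)
for origin, value in delivered.items():
    print(f"{origin:<12} {value:.2f}")
print(f"Total delivered: {sum(delivered.values()):.2f}")
print(f"Cumulative removal efficiency: {cumulative_efficiency(TABLE_9_2):.2%}")
```

The same two functions can be applied to any other set of per-origin potentials and efficiencies to see how much each origin contributes to delivered defects.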


Since Table 9-2 reflects approximate U.S. averages, the methods assumed are fairly careless requirements gathering; waterfall development; CMMI level 1; no formal inspections of requirements, design, or code; no static analysis; and only five forms of testing: (1) unit test, (2) new function test, (3) regression test, (4) system test, and (5) acceptance test.

Note also that during development, requirements will continue to grow and change at rates of 1 percent to 2 percent every calendar month. These changing requirements have higher defect potentials than the original requirements and lower levels of defect removal efficiency. This is yet another reason why requirements defects cause more problems than any other defect origin point.

Software requirements are the most intractable source of software defects. However, methods such as joint application design (JAD), quality function deployment (QFD), Six Sigma analysis, root-cause analysis, embedding users with the development team as practiced by Agile development, prototypes, and the use of formal requirements inspections can assist in bringing requirements defects under control.

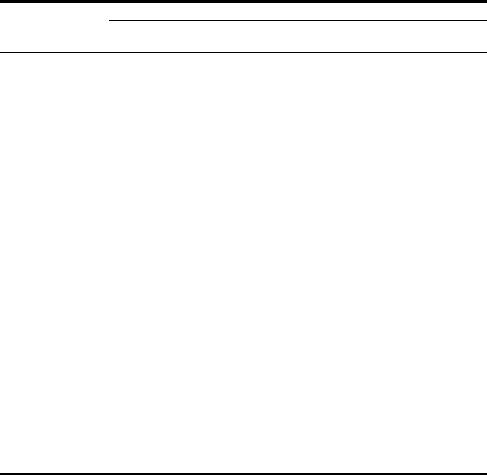

Table 9-3 shows what quality might look like if an optimal combination of defect prevention and defect removal activities were utilized. Table 9-3 assumes formal requirements methods, rigorous development such as that practiced using the Team Software Process (TSP) or the higher CMMI levels, prototypes and JAD, formal inspections of all deliverables, static analysis of code, and a full set of eight testing stages: (1) unit test, (2) new function test, (3) regression test, (4) performance test, (5) security test, (6) usability test, (7) system test, and (8) acceptance test.

Table 9-3 also assumes a software quality assurance (SQA) group and rigorous reporting of software defects starting with requirements, continuing through inspections, static analysis, and testing, and out into the field with multiple years of customer-reported defects, maintenance, and enhancements. Accumulating data such as that shown in Tables 9-1 through 9-3 requires longitudinal data collection that runs for many years.

TABLE 9-3 Optimal Defect Prevention and Defect Removal Activities

| Defect Origins | Defects per Function Point | Removal Efficiency | Delivered Defects per Function Point | Most Frequent Defect Cause |
|---|---|---|---|---|
| Requirements | 0.50 | 95.00% | 0.03 | Omission |
| Design | 0.75 | 97.00% | 0.02 | Omission |
| Code | 0.50 | 99.00% | 0.01 | Commission |
| Documents | 0.40 | 96.00% | 0.02 | Omission |
| Bad fixes | 0.20 | 92.00% | 0.02 | Commission |
| TOTAL | 2.35 | 96.40% | 0.08 | Omission |


This combination has the effect of cutting defect potentials by more than 50 percent and of raising cumulative defect removal efficiency from today's average of 85 percent up to more than 96 percent.
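
These two claims can be checked against the TOTAL rows of Tables 9-2 and 9-3. The symbols below are notation introduced here for illustration, not the author's: P is the defect potential per function point, D is delivered defects per function point, and E is cumulative defect removal efficiency. The value 0.0845 is the sum of the unrounded per-origin products in Table 9-3 (the table rounds it to 0.08).

```latex
\begin{align*}
E &= 1 - \frac{D}{P} \\[4pt]
\text{Reduction in defect potential} &= \frac{5.00 - 2.35}{5.00} = 0.53 \\[4pt]
E_{\text{Table 9-2}} &= 1 - \frac{0.75}{5.00} = 0.85 \\[4pt]
E_{\text{Table 9-3}} &= 1 - \frac{0.0845}{2.35} \approx 0.964
\end{align*}
```

So the defect potential falls by 53 percent, and cumulative removal efficiency rises from about 85 percent to about 96.4 percent, in line with the figures quoted above.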

It might be possible to exceed even the results shown in Table 9-3, but doing so would require additional methods such as the availability of a full suite of certified reusable materials.

Tables 9-2 and 9-3 are oversimplifications of real-life results. Defect potentials vary with the size of the application and with other factors. Defect removal efficiency levels also vary with application size. Bad-fix injections also vary by defect origins. Both defect potentials and defect removal efficiency levels vary by methodology, by CMMI levels, and by other factors as well. These will be discussed later in the section of this chapter dealing with defect prediction.

Because of the many definitions of quality used by the industry, it is best to start by showing what is predictable and measurable and what is not. To sort out the relevance of the many quality definitions, the author has developed a 10-point scoring method for software quality factors.

■ If a factor leads to improvement in quality, its maximum score is 3.
■ If a factor leads to improvement in customer satisfaction, its maximum score is 3.
■ If a factor leads to improvement in team morale, its maximum score is 2.
■ If a factor is predictable, its maximum score is 1.
■ If a factor is measurable, its maximum score is 1.
■ The total maximum score is 10.
■ The lowest possible score is 0.
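
The scoring rules above can be expressed as a capped sum of five components. The following is a minimal sketch under that assumption; the function name, the component keys, and the choice to reject out-of-range values are hypothetical, and the text does not give the component breakdown behind any individual score.

```python
# Maximum points per component, taken from the scoring rules in the text.
MAX_POINTS = {
    "quality": 3.0,
    "customer_satisfaction": 3.0,
    "team_morale": 2.0,
    "predictable": 1.0,
    "measurable": 1.0,
}


def quality_factor_score(**points):
    """Sum the component points for one quality factor.

    Components that are not supplied count as 0. Each supplied value
    must lie between 0 and the component's maximum.
    """
    unknown = set(points) - set(MAX_POINTS)
    if unknown:
        raise ValueError(f"unknown components: {sorted(unknown)}")
    total = 0.0
    for name, cap in MAX_POINTS.items():
        value = points.get(name, 0.0)
        if not 0.0 <= value <= cap:
            raise ValueError(f"{name} must be between 0 and {cap}")
        total += value
    return total


# A factor that earns the maximum on every component scores 10.
best = quality_factor_score(
    quality=3, customer_satisfaction=3, team_morale=2,
    predictable=1, measurable=1,
)
print(best)
```

Because the caps add up to 10 and every component has a floor of 0, any valid input yields a score in the 0 to 10 range described by the rules.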

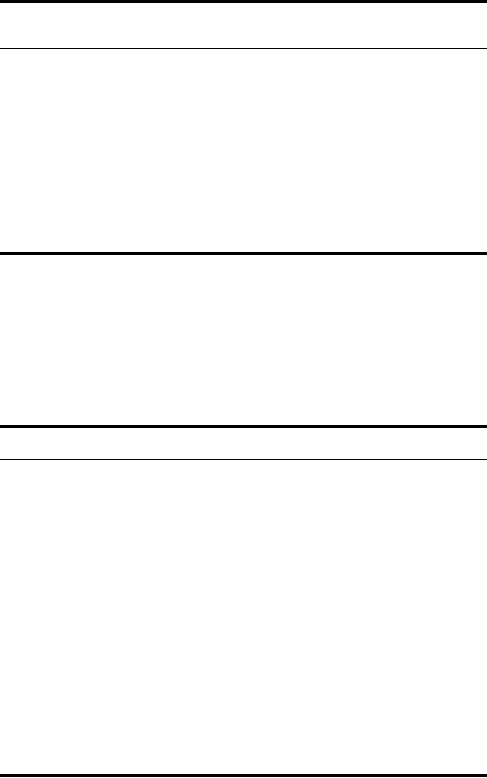

Table 9-4 lists all of the quality factors discussed in this chapter in rank order, using the scoring method just outlined. Table 9-4 shows whether a specific quality factor is measurable and predictable, and also the relevance of the factor to quality as based on surveys of software customers. It also includes a weighted judgment as to whether the factor has led to improvements in quality among the organizations that use it.

The quality definitions with a score of 10 have been the most effective in leading to quality improvements over time. As a rule, the quality definitions scoring higher than 7 are useful. However, the quality definitions that score below 5 have no empirical data available that shows any quality improvement at all.

While Table 9-4 is somewhat subjective, at least it provides a mathematical basis for scoring the relevance and importance of the rather vague and ambiguous collection of quality factors used by the software industry.

TABLE 9-4 Rank Order of Quality Factors by Importance to Quality

| Quality Factor | Measurable Property? | Predictable Property? | Relevance to Quality | Score |
|---|---|---|---|---|
| Best Quality Definitions | | | | |
| Defect potentials | Yes | Yes | Very high | 10.00 |
| Defect removal efficiency | Yes | Yes | Very high | 10.00 |
| Defect severity levels | Yes | Yes | Very high | 10.00 |
| Defect origins | Yes | Yes | Very high | 10.00 |
| Reliability | Yes | Yes | Very high | 10.00 |
| Good Quality Definitions | | | | |
| Toxic requirements | Yes | No | Very high | 9.50 |
| Missing requirements | Yes | No | Very high | 9.50 |
| Requirements conformance | Yes | No | Very high | 9.00 |
| Excess requirements | Yes | No | Medium | 9.00 |
| Usability | Yes | Yes | Very high | 8.00 |
| Testability | Yes | Yes | High | 8.00 |
| Defect causes | Yes | No | Very high | 8.00 |
| Fair Quality Definitions | | | | |
| Maintainability | Yes | Yes | High | 7.00 |
| Understandability | Yes | Yes | Medium | 6.00 |
| Traceability | Yes | No | Low | 6.00 |
| Modifiability | Yes | No | Medium | 5.00 |
| Verifiability | Yes | No | Medium | 5.00 |
| Poor Quality Definitions | | | | |
| Portability | Yes | Yes | Low | 4.00 |
| Expandability | Yes | No | Low | 3.00 |
| Scalability | Yes | No | Low | 2.00 |
| Interoperability | Yes | No | Low | 1.00 |
| Survivability | Yes | No | Low | 1.00 |
| Augmentability | No | No | Low | 0.00 |
| Flexibility | No | No | Low | 0.00 |
| Manageability | No | No | Low | 0.00 |
| Operability | No | No | Low | 0.00 |

In essence, Table 9-4 makes these points:

1. Conformance to requirements is hazardous unless incorrect, toxic, or dangerous requirements are weeded out. This definition has not demonstrated any improvements in quality for more than 30 years.
2. Most of the -ility quality definitions are hard to measure, and many are of marginal significance. Some cannot be measured at all. None of the -ility words tends to lead to tangible quality gains.
3. Quantification of defect potentials and defect removal efficiency levels has had the greatest impact on improving quality and also the greatest impact on customer satisfaction levels.

If software engineering is to evolve from a craft or art form into a true engineering field, it is necessary to put quality on a firm quantitative basis and to move away from vague and subjective quality definitions.

These

will still have a place, of